The Ethical Implications of AI

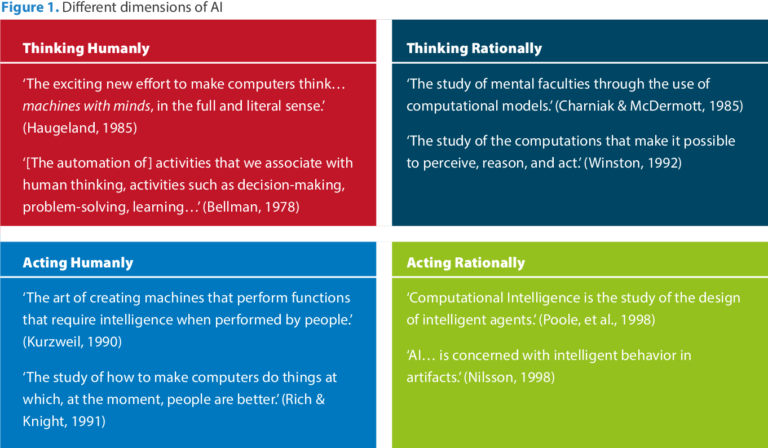

Artificial Intelligence (AI) has transformed many aspects of daily life. As AI systems grow more sophisticated and more deeply integrated into our routines, they raise important ethical questions that demand attention. The potential consequences and moral dilemmas that arise from developing and deploying AI have sparked an ongoing debate. That debate makes it important to examine the ethical implications of AI and how they may shape the society of the future.

Ethics in AI development

Issues in AI development

The development of AI raises a range of ethical concerns that need to be addressed carefully. One of the most prominent is the potential for bias and discrimination. Because AI systems are trained on large datasets, they can inherit the biases present in that data and produce discriminatory outcomes. This is a significant ethical challenge, and meeting it is necessary to ensure fairness and equality in AI applications.

Bias and discrimination

Bias in AI can take many forms, including gender bias, racial bias, or bias against other specific groups of people. For example, facial recognition systems have been found to misidentify individuals from ethnic minority backgrounds at higher rates than individuals from majority groups. Such errors can have serious consequences, including false accusations and unjust treatment. Addressing the problem requires measuring how a system performs for each group, training on representative data, and correcting the disparities that testing uncovers, so that outcomes are fair for all individuals.
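
The disparity mentioned above, with higher misidentification rates for some groups than for others, can be measured directly by computing error rates separately for each group and comparing them. The following is a minimal sketch under stated assumptions, not a standard auditing tool. The record layout `(group, predicted_id, true_id)` and the function names are hypothetical, and the toy records are invented purely for demonstration.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the misidentification rate for each demographic group.

    Each record is a (group, predicted_id, true_id) tuple; this layout is a
    hypothetical example, not a standard format.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted_id, true_id in records:
        totals[group] += 1
        if predicted_id != true_id:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Ratio of the highest group error rate to the lowest (1.0 means parity)."""
    lowest = min(rates.values())
    highest = max(rates.values())
    if lowest == 0:
        # Avoid division by zero: any errors against a zero baseline are unbounded.
        return float("inf") if highest > 0 else 1.0
    return highest / lowest


if __name__ == "__main__":
    # Invented toy data: four identification attempts per group.
    records = [
        ("group_a", "p1", "p1"), ("group_a", "p2", "p2"),
        ("group_a", "p3", "p3"), ("group_a", "p9", "p4"),
        ("group_b", "q1", "q1"), ("group_b", "q7", "q2"),
        ("group_b", "q3", "q3"), ("group_b", "q8", "q4"),
    ]
    rates = error_rates_by_group(records)
    print(rates)                 # {'group_a': 0.25, 'group_b': 0.5}
    print(max_disparity(rates))  # 2.0
```

A disparity ratio well above 1.0 signals that one group bears a disproportionate share of the errors. A real audit would also need to account for sample sizes and for different error types, such as false matches versus false non-matches.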

Accountability and transparency

Another crucial aspect of ethical AI development is accountability and transparency. As AI systems become more complex and autonomous, it becomes harder to determine how decisions are made and who is responsible for them. This opacity makes it difficult to detect harm caused by AI systems and to hold developers and users accountable. Establishing clear accountability frameworks and ensuring transparency in the development and deployment of AI are vital for maintaining trust and ethical standards.

Data privacy and security

AI development relies heavily on vast amounts of data, which raises concerns about data privacy and security. When AI systems process and analyze personal information, there is a risk that sensitive data will be accessed without authorization or misused. Protecting individuals’ privacy while harnessing the power of AI is paramount. Robust data privacy measures, including encryption, secure storage, and strict access controls, are essential to minimize these risks and ensure the ethical use of AI technology.

Addressing ethical concerns in AI

Developing ethical guidelines

To tackle the ethical concerns surrounding AI, comprehensive ethical guidelines are crucial. These guidelines should set out the principles and values that AI developers and users are expected to follow, promoting ethical decision-making and responsible AI practices. They provide a framework for resolving pressing ethical dilemmas and for guiding the responsible development and deployment of AI technologies.

Ensuring diversity and inclusivity

Promoting diversity and inclusivity in AI development is essential for addressing bias and discrimination. Diverse teams of researchers and developers bring different perspectives and experiences into the design and development process. This helps teams notice and mitigate biases that a more uniform group might overlook. As a result, the AI systems they build are more likely to be fair and inclusive and to meet the needs of diverse populations.

Ethical considerations in AI governance

Effective AI governance is vital to address ethical concerns. Governance frameworks need to be established to oversee the development, deployment, and use of AI systems. These frameworks should include mechanisms for ethical review, accountability, and oversight. Additionally, involving various stakeholders, including experts, policymakers, and members of the public, in AI governance can help ensure that ethical considerations are adequately addressed.

Algorithmic transparency and explainability

Enhancing algorithmic transparency and explainability is crucial for addressing ethical concerns in AI. Understanding how AI systems arrive at decisions is important to detect biases, assess fairness, and ensure accountability. Transparent and explainable algorithms can enable users, policymakers, and affected individuals to comprehend the decision-making processes of AI systems, fostering trust and enabling the identification and mitigation of potential ethical issues.
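
For the simplest case, a linear scoring model, an exact explanation is easy to produce. The score is a bias plus a weighted sum of features, so each weight-times-value term is exactly that feature’s contribution. The following sketch assumes such a model. The feature names, weights, and function name are hypothetical. More complex models such as deep neural networks usually need approximate attribution methods instead of this direct decomposition; SHAP and LIME are two widely used examples.

```python
def explain_linear_score(weights, bias, features):
    """Split a linear model's score into exact per-feature contributions.

    For score = bias + sum(weight_i * value_i), each term weight_i * value_i
    is that feature's contribution to the final score.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank features by the size of their effect, whether positive or negative.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    # Hypothetical credit-scoring weights and one applicant's (scaled) features.
    weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
    applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}
    score, ranked = explain_linear_score(weights, bias=1.0, features=applicant)
    print(score)   # 2.5
    print(ranked)  # [('income', 2.0), ('debt_ratio', -1.0), ('years_employed', 0.5)]
```

A ranked breakdown like this lets an affected person see which factors raised or lowered the score and by how much. That visibility is the kind of understanding the paragraph above calls for.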

Artificial Intelligence and job displacement

Automation’s impact on employment

The rise of AI and automation has raised concerns about the potential displacement of human workers. As AI technologies automate tasks and processes traditionally performed by humans, there is a fear that jobs will become obsolete, leading to unemployment and economic instability. It is essential to understand the impact of AI on employment and proactively address the potential challenges associated with job displacement.

Reskilling and upskilling the workforce

To mitigate the negative consequences of job displacement, reskilling and upskilling the workforce is crucial. Equipping individuals with the skills needed for a changing job market helps them transition into new roles that complement AI technologies. Investing in education and training programs that develop skills that are difficult to automate, such as creativity, emotional intelligence, and problem-solving, can smooth workforce transitions and reduce the social and economic impact of job displacement.

Social and economic consequences

The displacement of human workers by AI technologies can have significant social and economic consequences. Income inequality may increase, with AI benefiting certain sectors or individuals more than others. Additionally, job losses can lead to social unrest and a decrease in the overall well-being of affected communities. To address these challenges, it is essential to consider the broader social and economic implications of AI deployment and implement policies that promote fairness, inclusivity, and support for affected individuals and communities.

Reevaluating the value of work

As AI systems take over repetitive and mundane tasks, there is an opportunity to redefine the value of work. With time freed up by automation, people can focus on tasks that require creativity, empathy, and human interaction. This shift in the nature of work calls for a reevaluation of societal norms and greater recognition of uniquely human skills. Emphasizing human-centric work can lead to a more fulfilling and purpose-driven society.

AI and human decision-making

Autonomous decision-making systems

The increasing autonomy of AI systems raises questions about the role of humans in decision-making. As AI becomes more advanced, systems can increasingly make decisions without human intervention. This raises ethical concerns because it reduces human agency and blurs responsibility and accountability for the decisions that are made.

Moral responsibility and accountability

While AI systems can make decisions based on predefined rules or machine learning algorithms, the ultimate responsibility for the outcomes of those decisions lies with humans. Establishing clear lines of moral responsibility and accountability is crucial to ensure that AI systems are ethically developed and used. AI developers, organizations, and policymakers should be held accountable for the actions and consequences of AI systems, and mechanisms should be in place to address any ethical lapses.

Supplementing or replacing human judgment

AI technologies can either supplement or replace human judgment in decision-making. AI can provide valuable insights and assist with complex decisions, but it is important to strike a balance between human judgment and AI-driven recommendations. Relying on AI systems blindly, without considering their limitations, can lead to ethical problems and real harm. AI systems should be used as tools to augment human decision-making rather than to replace it entirely.

Ensuring human oversight

To maintain ethical decision-making processes involving AI, human oversight is essential. Humans should have the ability to review, question, and intervene in AI-driven decisions when necessary. Implementing mechanisms for ongoing monitoring, auditing, and evaluation of AI systems can help ensure that they align with ethical guidelines and values. Human oversight is crucial in identifying and rectifying biases, addressing unintended consequences, and maintaining ethical standards throughout the development and deployment of AI technologies.
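
A common pattern for keeping humans in the loop works in three parts:

- apply a model’s output automatically only when its confidence is high,
- route everything else to a human reviewer, and
- log every decision so it can be audited later.

The following sketch assumes a model that reports a confidence score between 0 and 1. The `ReviewQueue` class, its default threshold of 0.9, and the log format are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Hypothetical human-in-the-loop gate for model decisions."""
    threshold: float = 0.9                         # minimum confidence for automatic action
    pending: list = field(default_factory=list)    # cases waiting for a human
    audit_log: list = field(default_factory=list)  # every decision, for later review

    def submit(self, case_id, prediction, confidence):
        """Apply confident predictions; hold the rest for a human reviewer."""
        if confidence >= self.threshold:
            outcome = prediction
        else:
            self.pending.append((case_id, prediction, confidence))
            outcome = "needs_review"
        self.audit_log.append({"case": case_id, "prediction": prediction,
                               "confidence": confidence, "outcome": outcome})
        return outcome

    def resolve(self, case_id, reviewer, final_decision):
        """Record a human reviewer's decision, which may overrule the model."""
        self.pending = [case for case in self.pending if case[0] != case_id]
        self.audit_log.append({"case": case_id, "reviewer": reviewer,
                               "outcome": final_decision})
        return final_decision


if __name__ == "__main__":
    queue = ReviewQueue(threshold=0.8)
    print(queue.submit("case-1", "approve", 0.95))           # approve
    print(queue.submit("case-2", "deny", 0.60))              # needs_review
    print(queue.resolve("case-2", "reviewer-7", "approve"))  # approve
    print(len(queue.audit_log))                              # 3
```

The threshold sets the trade-off. Raising it sends more cases to human reviewers; lowering it automates more decisions. Model confidence scores are often poorly calibrated, so a threshold like this is only as trustworthy as the calibration behind it.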

AI and privacy concerns

Data collection and surveillance

The widespread adoption of AI technology often involves extensive data collection and surveillance, raising concerns about privacy. AI systems rely on vast amounts of data to function effectively, but the collection of personal and sensitive information can compromise individuals’ privacy rights. Striking a balance between data collection for the purposes of AI development and the protection of individuals’ privacy is essential. Implementing strict data protection measures, including anonymization and secure data management, is necessary to address privacy concerns in AI.
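
Full anonymization is hard to achieve, so a common first step is pseudonymization: replacing direct identifiers such as email addresses with keyed hashes. The following sketch uses HMAC-SHA256 from Python’s standard library. The field names and function names are hypothetical.

This is one layer of protection, not a complete anonymization scheme. Under regulations such as the EU’s GDPR, pseudonymized data is generally still treated as personal data. Remaining attributes such as age or postcode can also still allow re-identification.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with its HMAC-SHA256 digest under a secret key.

    The same identifier always maps to the same token under one key, so
    datasets can still be joined, but the token cannot be linked back to the
    original value by anyone who does not hold the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, key: bytes, id_fields=("email", "name")) -> dict:
    """Return a copy of the record with direct identifiers pseudonymized."""
    cleaned = dict(record)
    for field_name in id_fields:
        if field_name in cleaned:
            cleaned[field_name] = pseudonymize(str(cleaned[field_name]), key)
    return cleaned


if __name__ == "__main__":
    # In practice the key would live in a secrets manager, separate from the data.
    key = secrets.token_bytes(32)
    record = {"email": "alice@example.com", "name": "Alice", "age": 34}
    print(strip_identifiers(record, key))
```

Using a keyed hash rather than a plain hash matters here. Without a secret key, anyone could hash a list of known email addresses and match them against the tokens.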

Intrusion into personal lives

As AI technologies become more pervasive, concern is growing about intrusion into personal lives. AI systems can gather detailed information about individuals’ preferences and behaviors. They can even infer sensitive traits such as beliefs or emotional states, which creates the potential for privacy violations. Respecting individuals’ autonomy, consent, and privacy rights should be a priority in AI development. Transparent data collection practices and meaningful control over personal data can help mitigate these concerns.

Data ownership and consent

AI development raises questions about data ownership and consent. Individuals may unknowingly contribute their data to AI systems through online interactions, social media, or other platforms. It is crucial to establish clear guidelines on ownership and consent, ensuring that individuals have the right to control how their data is used and shared. Implementing transparent data collection practices and obtaining informed consent can help address concerns around data ownership and ensure ethical AI development.
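
Honoring consent in practice means checking, before any data is used, that its owner agreed to that specific purpose. The following sketch assumes a simple in-memory registry keyed by user ID and purpose. `ConsentRegistry`, `select_training_data`, and the purpose name `model_training` are all hypothetical, and a production system would need persistent, auditable storage.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Hypothetical in-memory record of which purposes each person agreed to."""

    def __init__(self):
        self._grants = {}  # user_id -> {purpose: time consent was given}

    def grant(self, user_id, purpose):
        self._grants.setdefault(user_id, {})[purpose] = datetime.now(timezone.utc)

    def withdraw(self, user_id, purpose):
        # Withdrawal deletes the grant, so every later check for this purpose fails.
        self._grants.get(user_id, {}).pop(purpose, None)

    def allows(self, user_id, purpose):
        return purpose in self._grants.get(user_id, {})


def select_training_data(records, registry, purpose="model_training"):
    """Keep only records whose owners consented to this specific purpose."""
    return [r for r in records if registry.allows(r["user_id"], purpose)]


if __name__ == "__main__":
    registry = ConsentRegistry()
    registry.grant("u1", "model_training")
    registry.grant("u2", "analytics")  # consent to analytics does not cover training
    records = [{"user_id": "u1"}, {"user_id": "u2"}, {"user_id": "u3"}]
    print(select_training_data(records, registry))  # [{'user_id': 'u1'}]
    registry.withdraw("u1", "model_training")
    print(select_training_data(records, registry))  # []
```

Tying consent to a purpose, rather than recording a single yes/no flag, reflects the idea that people should control how their data is used and not merely whether it is collected.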

Minimizing privacy risks

To address privacy concerns in AI effectively, it is essential to adopt privacy-by-design principles, which integrate privacy considerations into the design and development of AI systems from the outset. Robust data protection measures, privacy impact assessments, and a culture of privacy awareness and accountability can help minimize privacy risks. By prioritizing privacy in AI development, it is possible to harness the benefits of AI while respecting individuals’ privacy rights.

Ethics of AI in healthcare

Patient data analysis

AI has the potential to revolutionize healthcare by analyzing vast amounts of patient data and providing valuable insights. However, the use of personal health information in AI systems raises ethical concerns. Safeguarding patient privacy and ensuring the secure handling of sensitive medical data is crucial. Adhering to strict data protection regulations, implementing robust security measures, and obtaining informed consent are essential to address ethical concerns related to patient data analysis in healthcare AI.

Medical diagnosis and decision-making

AI technologies can assist in medical diagnosis and decision-making, improving accuracy and efficiency. However, concerns arise when AI systems are relied upon exclusively for critical healthcare decisions. Biases or errors in AI algorithms can lead to misdiagnosis or inappropriate treatment plans. To address these concerns, AI systems must be rigorously validated, transparent, and explainable. Human expertise and oversight must also remain part of the process to maintain the highest standard of care in AI-assisted diagnosis.

Risk of biased algorithms

The risk of biased algorithms in healthcare AI poses significant ethical challenges. Biased algorithms can produce unequal outcomes for different patient populations, widening existing healthcare disparities. AI algorithms should therefore be thoroughly vetted and tested for bias to ensure they are fair and inclusive. Training on diverse datasets that cover a broad range of demographics, and continuously monitoring algorithm performance across patient groups, can help reduce the risks of biased healthcare AI.

Doctor-patient relationship

The introduction of AI in healthcare raises questions about the doctor-patient relationship. While AI can provide valuable insights and support doctors in diagnosis and treatment decisions, it is essential to maintain the trust and human connection between healthcare providers and patients. Clear communication about the role of AI, fostering empathy and understanding, and ensuring that AI is used as a complementary tool rather than a replacement for human interaction can help preserve the doctor-patient relationship while leveraging the benefits of AI technology in healthcare.

AI and inequality

Amplifying existing inequalities

The deployment of AI can potentially amplify existing inequalities in society. AI algorithms trained on biased data can perpetuate discrimination, exacerbating social disparities. It is crucial to proactively address these biases and ensure that AI systems are fair and inclusive. Implementing measures to mitigate biases and conducting regular audits and evaluations of AI systems can help prevent the amplification of existing inequalities.
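
One simple audit check compares the rate of favorable outcomes, such as approvals, across groups. A widely cited heuristic is the four-fifths rule from US employment-selection guidelines. It flags any group whose selection rate is below 80% of the highest group’s rate.

The following sketch implements that ratio check. The function names and toy data are hypothetical. Passing this test does not by itself establish that a system is fair, since it ignores error rates and sample sizes.

```python
def selection_rates(outcomes):
    """Map each group to its share of favorable decisions (1 = favorable, 0 = not)."""
    return {group: sum(decisions) / len(decisions) for group, decisions in outcomes.items()}


def disparate_impact_audit(outcomes, threshold=0.8):
    """Flag groups whose selection rate is below threshold x the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    flagged = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        if ratio < threshold:
            flagged[group] = round(ratio, 3)
    return rates, flagged


if __name__ == "__main__":
    # Invented toy decisions for two groups of five applicants each.
    outcomes = {"group_a": [1, 1, 1, 0, 1], "group_b": [1, 0, 0, 1, 0]}
    rates, flagged = disparate_impact_audit(outcomes)
    print(rates)    # {'group_a': 0.8, 'group_b': 0.4}
    print(flagged)  # {'group_b': 0.5}
```

Running a check like this on a schedule, rather than once before launch, is one way to put the paragraph’s call for regular audits into practice.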

Digital divide and access to AI

The digital divide refers to unequal access to technology and digital resources. If it is not addressed, the adoption of AI could widen this divide further. Two measures can help bridge the gap: ensuring equitable access to AI technologies, including affordable internet access, and providing training and support for marginalized communities. Improving digital literacy and fostering inclusivity in AI development and deployment are essential for reducing inequality.

Fair distribution of AI benefits

As AI technologies advance, it is important to consider how their benefits are distributed. Those benefits should not accrue disproportionately to a select few or be concentrated in particular industries or regions. Policies should be established to distribute the benefits of AI fairly across society. These include initiatives that create opportunities for everyone, make the advantages of AI accessible to people from all walks of life, and reduce social and economic disparities.

Mitigating social disparities

AI has the potential to address social disparities and improve the quality of life for marginalized communities. However, it is essential to be mindful of the unintended consequences that AI deployment can have on these communities. Mitigating social disparities requires a comprehensive approach that involves active engagement with affected communities, addressing bias and discrimination, and prioritizing inclusive AI development. Only through a concerted effort can AI be effectively harnessed to reduce social inequalities.

AI and autonomous weapons

Lethal autonomous weapons systems

The development and deployment of autonomous weapons raise significant ethical concerns. Lethal autonomous weapons systems, capable of selecting and engaging targets without human intervention, pose serious dangers. The lack of human control in decision-making processes can lead to unintended harm, loss of life, and escalation of conflicts. The ethical implications of autonomous weapons systems necessitate robust international regulations to ensure human control and prevent the misuse of technology in warfare.

Ethical considerations in warfare

Warfare involving AI technologies raises unprecedented ethical considerations. The use of AI in military operations must adhere to ethical principles, including proportionality, distinction, and the protection of civilians. AI systems should not be used to intentionally cause harm or violate human rights. Strong ethical frameworks and international agreements need to be in place to govern the development, deployment, and use of AI in warfare, ensuring that military actions align with humanitarian norms and principles.

Human control and accountability

To address ethical concerns surrounding autonomous weapons, maintaining human control is essential. Humans should have the ultimate authority and responsibility in decision-making processes that involve the use of lethal force. Algorithms and AI systems should be designed with strict limitations to prevent unauthorized actions or misuse. Establishing clear lines of accountability and legal frameworks can ensure that individuals and organizations using AI in warfare are held responsible for their actions.

Impacts on international security

The introduction of AI technologies in warfare has significant implications for international security. The rapid advancements in AI can potentially disrupt the balance of power and give certain countries or organizations an advantage in military capabilities. It is essential to foster international collaborations and agreements to address security concerns associated with AI. Promoting transparency, fostering trust, and establishing mechanisms for dialogue and cooperation can help mitigate potential security risks and ensure global stability.

AI and the environment

Energy consumption and carbon footprint

The development and deployment of AI technologies have a notable environmental impact, driven mainly by high energy consumption. Training and running AI models require substantial computational resources, and the electricity they draw contributes to carbon emissions. To address this concern, researchers and developers should focus on energy-efficient algorithms and hardware. Embracing sustainable practices and using renewable energy sources can further reduce the environmental footprint of AI technologies.
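
A rough estimate of a training run’s footprint takes three steps:

1. Multiply hardware power draw by running time.
2. Scale the result by the data center’s power usage effectiveness (PUE), which accounts for cooling and other overhead.
3. Multiply by the carbon intensity of the electricity grid.

The following sketch follows that approach. All default values and example inputs are placeholder assumptions, not measurements of any real system.

```python
def training_footprint(num_accelerators, watts_per_accelerator, hours,
                       pue=1.5, grid_kg_co2_per_kwh=0.4):
    """Rough energy (kWh) and emissions (kg CO2e) estimate for a training run.

    pue: power usage effectiveness of the data center (facility overhead).
    grid_kg_co2_per_kwh: carbon intensity of the electricity supply.
    Both defaults are placeholder assumptions, not measured values.
    """
    it_energy_kwh = num_accelerators * watts_per_accelerator * hours / 1000  # Wh -> kWh
    facility_energy_kwh = it_energy_kwh * pue
    emissions_kg = facility_energy_kwh * grid_kg_co2_per_kwh
    return facility_energy_kwh, emissions_kg


if __name__ == "__main__":
    energy, emissions = training_footprint(8, 300, 100)
    print(f"{energy:.0f} kWh, {emissions:.0f} kg CO2e")  # 360 kWh, 144 kg CO2e
    _, low_carbon = training_footprint(8, 300, 100, grid_kg_co2_per_kwh=0.05)
    print(f"{low_carbon:.0f} kg CO2e on a low-carbon grid")  # 18 kg CO2e on a low-carbon grid
```

With these placeholder inputs, 8 accelerators drawing 300 W for 100 hours use:

- 240 kWh of IT energy,
- 360 kWh after the 1.5 PUE overhead, and
- about 144 kg CO2e on a 0.4 kg/kWh grid.

The same run on a 0.05 kg/kWh low-carbon grid comes to about 18 kg. This illustrates how strongly the energy source affects the result.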

Sustainable AI practices

In addition to reducing energy consumption, sustainable AI practices involve considering the entire life cycle of AI systems. This includes responsible sourcing and disposal of hardware, minimizing e-waste, and employing energy-efficient infrastructure. Adopting sustainable practices in AI development and implementation can contribute to reducing the environmental impact and ensuring that AI technologies align with broader sustainability goals.

Environmental impact assessments

Conducting comprehensive environmental impact assessments for AI projects is essential to understand and mitigate their potential negative effects. Assessments should consider the entire life cycle of AI systems, from raw material extraction to disposal. Identifying potential environmental risks, such as resource depletion or pollution, early in the development process allows for the implementation of mitigation strategies and the adoption of sustainable practices.

Mitigating negative environmental effects

To effectively mitigate the negative environmental effects of AI, it is crucial to integrate environmental considerations into AI development strategies. This involves promoting research and innovation in green AI, developing algorithms that optimize energy consumption, and implementing responsible AI practices. Collaboration between AI researchers, environmental experts, and policymakers is essential to ensure that AI technologies contribute positively to environmental sustainability.

In conclusion, the development and deployment of AI technologies raise a multitude of ethical concerns that cannot be ignored. From bias and discrimination to privacy concerns and impacts on society, addressing these ethical implications requires a comprehensive approach. This involves developing ethical guidelines, promoting transparency and explainability, and ensuring diversity and inclusivity in AI development. It also entails carefully considering the impact on employment, the role of human decision-making, and the importance of privacy in AI applications. By proactively addressing these ethical concerns, we can harness the potential of AI while upholding ethical standards and promoting a fair and inclusive future.