How To Ensure The Safety And Ethics Of AI

As AI reaches into more and more areas of daily life, making sure it is developed and used safely and ethically has become a pressing concern. Its development and application must align with ethical principles and put safety first. This article explores key strategies and considerations for the responsible use of AI, aimed at organizations and individuals who want to work with artificial intelligence with integrity and care.

Understanding the Risks of AI

Artificial intelligence has transformed many sectors, delivering benefits and advances that were once purely imaginary. As with any new technology, however, it also carries potential drawbacks. Understanding and recognizing these risks is essential for developing AI responsibly and ethically.

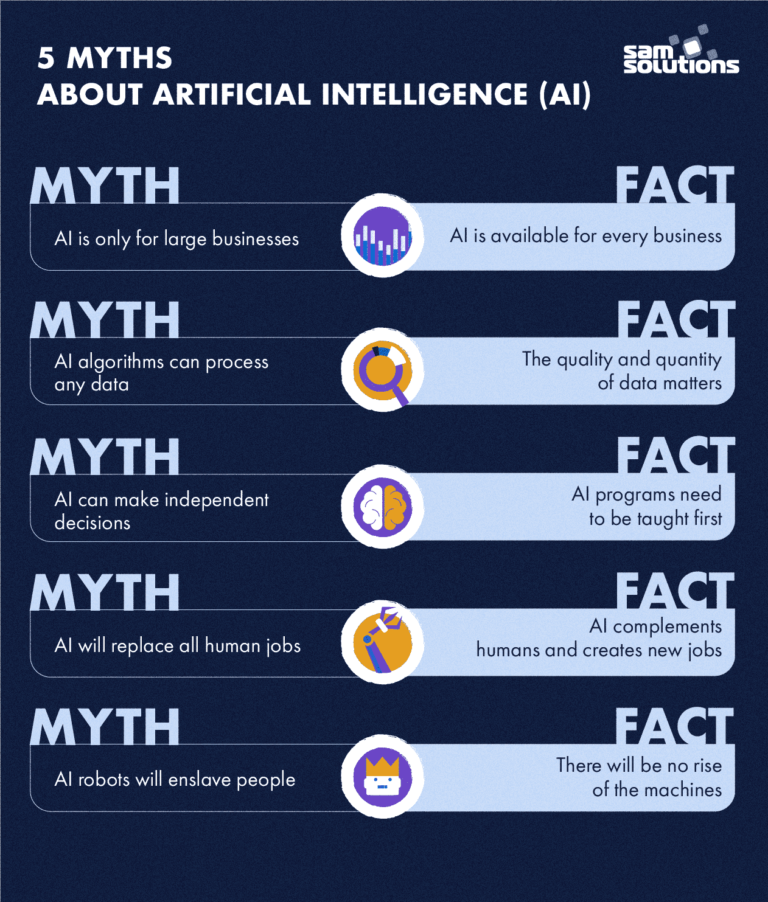

Recognizing potential drawbacks

As powerful as AI is, implementing it brings certain risks and challenges. One major concern is job displacement: because AI can automate many tasks, some job roles may become obsolete. Another is overreliance on AI, which can erode human judgment and decision-making. Understanding these drawbacks is the first step toward addressing them effectively.

Identifying ethical concerns

Ethical considerations play a pivotal role in the development and deployment of AI systems. If they are not designed and implemented with care, AI algorithms can perpetuate biases, discriminate against certain groups, and intrude on individual privacy. Identifying and addressing these concerns is essential to ensure that AI is developed and used responsibly and with regard for the people it affects.

Establishing Clear Guidelines and Standards

To ensure the safety and ethics of AI, clear guidelines and standards must govern its development and use. These should include both global frameworks and industry-specific protocols, which together form a comprehensive regulatory structure for AI systems.

Creating international regulatory frameworks

Establishing international regulatory frameworks is essential to harmonize standards for the ethical development and deployment of AI. Such frameworks should set minimum requirements in areas such as data protection, transparency, and algorithmic accountability. Collaborating on a global scale allows us to address cross-border challenges and apply a consistent ethical approach to AI.

Developing industry-specific guidelines

In addition to global frameworks, industry-specific guidelines are crucial to address the unique ethical concerns and risks associated with particular sectors. Industries such as healthcare, finance, and transportation have their own specific challenges and requirements. Developing guidelines tailored to these industries will provide more targeted solutions and ensure the safety and ethics of AI in their respective domains.

Implementing Transparency and Explainability

One of the key ways to address the risks and ethical concerns of AI is through transparency and explainability. By promoting transparency and enabling access to information and explanations, we can build trust and ensure accountability in AI systems.

Promoting transparency in AI systems

Transparency in AI systems means openly documenting how a system was developed, how its algorithms reach decisions, and what data it relies on, so that users and stakeholders can examine these details. This allows the system’s outputs to be better understood and scrutinized, helping ensure that it operates within ethical boundaries. Transparency also makes biases easier to detect and mitigate, promoting fairness and inclusivity.

Enabling access to information and explanations

AI systems should give users access to information about, and explanations of, their decisions and actions. Users should be able to understand why a system made a particular decision or recommendation. This empowers them to make informed choices and helps surface and correct ethical issues as they arise. In this way, explanations foster trust and support the ethical development of AI.
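For models with simple internals, an explanation can be generated directly from the model itself. The sketch below assumes a hypothetical linear scoring model (the `LinearScorer` class, its feature names, weights, and threshold are all illustrative, not taken from any real system) and reports how much each input contributed to a decision. More complex models usually need post-hoc attribution methods such as SHAP or LIME, which are not shown here.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical linear model: score = bias + sum(weight * feature value)."""

    weights: dict[str, float]
    bias: float
    threshold: float

    def score(self, features: dict[str, float]) -> float:
        return self.bias + sum(w * features[name] for name, w in self.weights.items())

    def explain(self, features: dict[str, float]) -> dict:
        # For a linear model, each feature's contribution is weight * value,
        # and the contributions plus the bias add up to the score.
        contributions = {name: w * features[name] for name, w in self.weights.items()}
        score = self.bias + sum(contributions.values())
        # Rank factors by the size of their effect, positive or negative.
        ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return {
            "decision": "approve" if score >= self.threshold else "decline",
            "score": score,
            "top_factors": ranked,
        }


# Illustrative values only.
scorer = LinearScorer(
    weights={"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.3},
    bias=-1.0,
    threshold=0.0,
)
applicant = {"income": 4.0, "debt_ratio": 0.6, "years_employed": 2.0}
report = scorer.explain(applicant)
print(report["decision"], round(report["score"], 2))
for name, contribution in report["top_factors"]:
    print(f"{name}: {contribution:+.2f}")
```

For this illustrative applicant, income is the largest positive factor and debt ratio the largest negative one, which is the kind of reason a user could be shown alongside the decision.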

Ensuring Privacy and Data Security

AI systems rely on vast amounts of data, so ensuring privacy and data security is crucial for protecting personal information and preventing unauthorized access and misuse. Safeguarding individuals’ privacy rights and securing the data used by AI systems are paramount for maintaining public trust and ethical practice.

Protecting personal data

Stringent measures must be implemented to protect personal data throughout the AI lifecycle. Anonymizing and aggregating data, implementing secure storage systems, and obtaining informed consent from individuals are essential steps in protecting privacy. Additionally, complying with data protection regulations and regularly auditing data handling practices will help ensure that personal data is appropriately safeguarded.
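To illustrate two of these steps, the following sketch replaces raw identifiers with keyed-hash pseudonyms and releases only aggregate counts, suppressing groups too small to hide individuals. The function names, the example key, and the minimum group size of 5 are assumptions chosen for illustration. Pseudonymization is weaker than full anonymization: under regulations such as the GDPR, pseudonymized data generally still counts as personal data, and the secret key must itself be stored securely.

```python
import hashlib
import hmac
from collections import Counter


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    # HMAC-SHA256: the same identifier always maps to the same token, but the
    # token cannot be reversed or recomputed without the secret key.
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_counts(records: list[dict], group_field: str, min_group_size: int = 5) -> dict:
    # Count records per group, then drop groups smaller than min_group_size,
    # because very small groups can make individuals re-identifiable.
    counts = Counter(record[group_field] for record in records)
    return {group: n for group, n in counts.items() if n >= min_group_size}


# Illustrative key only; a real key would come from a secrets manager.
KEY = b"example-secret-key"
records = [
    {"user": pseudonymize(f"user{i}@example.com", KEY), "region": region}
    for i, region in enumerate(["north"] * 6 + ["south"] * 2)
]
print(aggregate_counts(records, "region"))
```

Here the "south" group has only two records, so it is withheld from the released counts.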

Preventing unauthorized access and misuse

Implementing robust security measures is vital in preventing unauthorized access and potential misuse of AI systems. This involves securing both the physical infrastructure and the digital networks through which AI operates. Strict access controls, encryption protocols, and continuous monitoring of system activities are some of the measures that can be implemented to mitigate the risk of unauthorized access and misuse of AI systems.
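Strict access controls are often expressed as role-based permissions. The minimal sketch below assumes a hypothetical set of roles and actions (`viewer`, `update_model`, and so on), denies anything not explicitly granted, and logs every access decision so it can be monitored later. Encryption is deliberately left out; in practice it should come from a well-vetted cryptographic library rather than custom code.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("ai_system.audit")  # hypothetical logger name

# Hypothetical role-to-permission policy; a real deployment would load this
# from a managed policy store instead of hard-coding it.
ROLE_PERMISSIONS = {
    "viewer": {"predict"},
    "data_scientist": {"predict", "read_training_data"},
    "admin": {"predict", "read_training_data", "update_model"},
}


def is_allowed(user: str, role: str, action: str) -> bool:
    # Deny by default: unknown roles get an empty permission set.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    # Log every decision, allowed or denied, so activity can be monitored.
    audit_log.info(
        "%s user=%s role=%s action=%s allowed=%s",
        datetime.now(timezone.utc).isoformat(), user, role, action, allowed,
    )
    return allowed


print(is_allowed("alice", "viewer", "predict"))
print(is_allowed("alice", "viewer", "update_model"))
```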

Addressing Bias and Fairness

Bias in AI algorithms is a significant concern that can perpetuate discrimination, reinforce societal biases, and hinder the fairness and inclusivity of AI systems. Therefore, it is essential to actively detect and mitigate biases to ensure that AI systems are fair, unbiased, and inclusive.

Detecting and mitigating biases in AI algorithms

Developing mechanisms to detect bias in AI algorithms is crucial to addressing this concern effectively. AI systems should be monitored and evaluated for discriminatory or biased outputs, for example by comparing outcomes and error rates across demographic groups. Once biases are identified, developers can amend the algorithms and training data to mitigate them and ensure fair outcomes for all individuals.
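One simple check compares how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. A common heuristic, the "four-fifths rule" from US employment guidance, treats a ratio below 0.8 as a warning sign; the threshold, function names, and toy data here are illustrative assumptions, and a low ratio calls for investigation rather than proving discrimination on its own.

```python
from collections import defaultdict


def selection_rates(outcomes: list[int], groups: list[str]) -> dict[str, float]:
    # Fraction of favorable outcomes (1 = e.g. approved) within each group.
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes: list[int], groups: list[str]) -> float:
    # Lowest group selection rate divided by the highest (1.0 means parity).
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data: group "a" is approved 3 of 4 times, group "b" 1 of 4 times.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
ratio = disparate_impact_ratio(outcomes, groups)
print(selection_rates(outcomes, groups), round(ratio, 2))
if ratio < 0.8:  # four-fifths heuristic; an illustrative threshold
    print("Possible adverse impact: investigate further.")
```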

Ensuring fairness and inclusivity

Fairness in AI means ensuring that the outputs and decisions made by AI systems are free from discrimination and prejudice. Inclusivity involves designing AI algorithms that consider the needs and perspectives of diverse groups. By incorporating fairness and inclusivity into the development process, we can minimize the impact of biases and create AI systems that are ethically sound and beneficial to everyone.
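Fairness can be made measurable in several ways, and the definitions can conflict with one another. As one example, the sketch below checks "equal opportunity": among people who truly qualify for a positive outcome, each group should be approved at a similar rate. The function name and the toy labels are hypothetical, and which fairness definition is appropriate depends on the application.

```python
def equal_opportunity_gap(
    y_true: list[int], y_pred: list[int], groups: list[str]
) -> tuple[float, dict[str, float]]:
    """Largest difference in true positive rate between groups."""
    rates: dict[str, float] = {}
    for group in sorted(set(groups)):
        # Predictions for members of this group who truly qualify (y_true == 1).
        hits = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
        if hits:  # skip groups with no qualifying members
            rates[group] = sum(hits) / len(hits)
    gap = max(rates.values()) - min(rates.values()) if rates else 0.0
    return gap, rates


# Toy labels: 1 = qualifies (y_true) or predicted to qualify (y_pred).
y_true = [1, 1, 1, 0, 1, 1, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["a"] * 4 + ["b"] * 4
gap, rates = equal_opportunity_gap(y_true, y_pred, groups)
print(rates, round(gap, 2))
```

In this toy data, qualifying members of group "a" are approved twice as often as qualifying members of group "b", a gap of about 0.33 that would merit a closer look.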

Mitigating the Risk of Unintended Consequences

AI systems, if not carefully monitored and regulated, can have unintended consequences that may pose risks to individuals and society at large. It is imperative to implement impact assessments and continuously monitor and adapt AI systems to mitigate these risks effectively.

Implementing impact assessments

Conducting thorough impact assessments before deploying AI systems can help identify potential risks and unintended consequences. These assessments should evaluate the social, economic, and ethical implications of AI systems. By proactively addressing these concerns, we can design and develop AI systems that align with societal values and minimize any negative effects.

Monitoring and adapting AI systems

Continuous monitoring and adaptation of AI systems are essential to prevent or mitigate any unintended consequences that may arise over time. Ongoing evaluation of system outputs, regular feedback from users, and the ability to adapt algorithms and models are critical in addressing emerging risks and maintaining the ethical standards of AI.
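Ongoing evaluation often includes checking whether the data a model sees in production has drifted away from the data it was built on. The sketch below computes the Population Stability Index (PSI) for a single numeric feature. The equal-width binning, the `eps` smoothing value, and the commonly cited rule of thumb that a PSI above about 0.25 indicates a significant shift are assumptions for illustration, not fixed standards.

```python
import math


def population_stability_index(
    expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    # Equal-width bins spanning the range of the reference (expected) data.
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the end bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]         # data the model was built on
production = [0.5 + i / 200 for i in range(100)]  # shifted production data
psi = population_stability_index(reference, production)
print(round(psi, 3), "significant drift" if psi > 0.25 else "stable")
```

A check like this can run on a schedule, with a high PSI triggering the user feedback review and model adaptation described above.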

Enhancing Collaboration and Accountability

To ensure the safety and ethics of AI, it is essential to foster collaboration between various disciplines and establish accountability frameworks. By working together and holding all stakeholders accountable, we can ensure that AI development and deployment follows ethical guidelines and prioritizes the well-being of individuals and society.

Fostering interdisciplinary collaboration

AI development draws on many fields, including technology, ethics, law, and the social sciences. Interdisciplinary collaboration brings these diverse perspectives and areas of expertise together, ensuring a comprehensive approach to AI development. By working across disciplines, we can address ethical concerns, identify potential risks, and incorporate a wider range of viewpoints in shaping the future of AI.

Establishing accountability frameworks

Accountability is a crucial aspect of AI development and deployment. Establishing frameworks that hold developers, organizations, and governments accountable for the ethical and responsible use of AI is essential. This includes ensuring transparency in decision-making processes, defining mechanisms to address grievances, and providing avenues for redress in case of any harm caused by AI systems. By establishing accountability frameworks, we create a sense of responsibility and promote ethical practices.

Promoting AI Governance and Auditing

Governance and auditing of AI systems are necessary to ensure adherence to ethical guidelines and identify any shortcomings or lapses in the development and deployment of AI. Implementing AI governance frameworks and conducting regular audits provide a systematic approach to monitor and regulate AI systems.

Implementing AI governance frameworks

AI governance frameworks outline the rules, policies, and procedures that govern the development, deployment, and use of AI systems. These frameworks should include considerations for ethical principles, transparency, and accountability. By implementing AI governance frameworks, we establish a structured approach to ensure the safety and ethics of AI.

Conducting regular audits of AI systems

Regular audits of AI systems are essential in assessing their compliance with ethical guidelines and identifying any shortcomings or risks. Audits should evaluate various aspects, such as data handling practices, transparency, fairness, and impact on individuals and society. By conducting regular audits, we can proactively address ethical concerns, rectify any issues, and ensure that AI systems align with ethical standards.

Investing in AI Safety Research and Education

To ensure the safety and ethics of AI, it is crucial to invest in research and education specific to AI safety. This includes conducting research on potential risks and challenges associated with AI systems and educating stakeholders about these risks.

Supporting research on AI safety

Research on AI safety is essential in understanding and addressing potential risks and challenges that emerge with the advancement of AI technology. Investing in research enables the development of effective strategies, tools, and frameworks to mitigate these risks. By supporting ongoing research, we can bridge the gap between technological advancements and responsible AI development.

Educating stakeholders on potential risks

Educating stakeholders, including developers, policymakers, and the public, about the potential risks and ethical concerns of AI is crucial. This education should cover not only the technical aspects of AI but also the broader societal implications and impacts. By raising awareness and providing education, we empower individuals and organizations to make informed decisions and contribute to the ethical development and use of AI.

Engaging with Ethical AI Development

To ensure the safety and ethics of AI, it is vital to involve diverse perspectives and to address ethical questions throughout the entire AI design and development process.

Involving diverse perspectives

Incorporating diverse perspectives is essential to avoid biases and create AI systems that are fair, inclusive, and beneficial to all. This involves engaging individuals from different backgrounds, cultures, and experiences in the design, development, and decision-making processes. By including diverse perspectives, we can minimize the risk of unintentional biases and ensure that AI systems serve the needs of a broad range of individuals.

Ethical considerations in AI design and development

From the outset, ethical considerations should be integrated into the design and development of AI systems. This involves identifying potential risks, addressing biases, and upholding ethical principles such as fairness, transparency, and accountability. By embedding ethics into AI design and development, we create a solid foundation for responsible AI that prioritizes the well-being and interests of individuals and society.

In conclusion, ensuring the safety and ethics of AI requires a comprehensive approach that recognizes the potential risks, establishes clear guidelines and standards, promotes transparency and explainability, safeguards privacy and data security, addresses bias and fairness, mitigates unintended consequences, enhances collaboration and accountability, promotes governance and auditing, invests in AI safety research and education, and engages with ethical AI development. By prioritizing ethical considerations and adhering to these guidelines, we can harness the benefits of AI while mitigating its potential risks, ensuring a future where AI serves humanity ethically and responsibly.