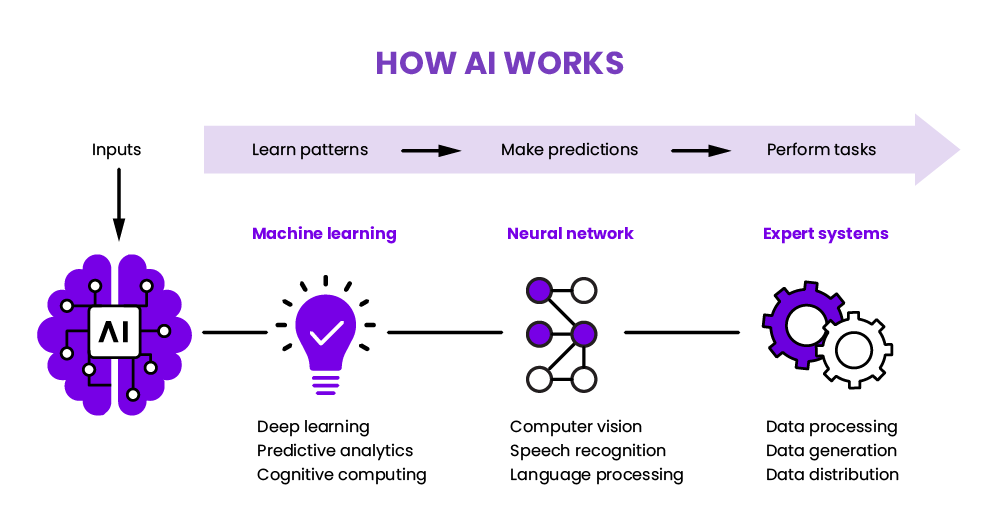

How AI Works

Imagine a world where machines can learn, reason, and solve problems that would otherwise require human judgment. Artificial Intelligence (AI) pursues that goal by combining large amounts of data with algorithms that learn from it. This article explains how AI works, from the basics of data, machine learning, and neural networks to its everyday applications and the ethical questions they raise.

This image is property of www.weka.io.

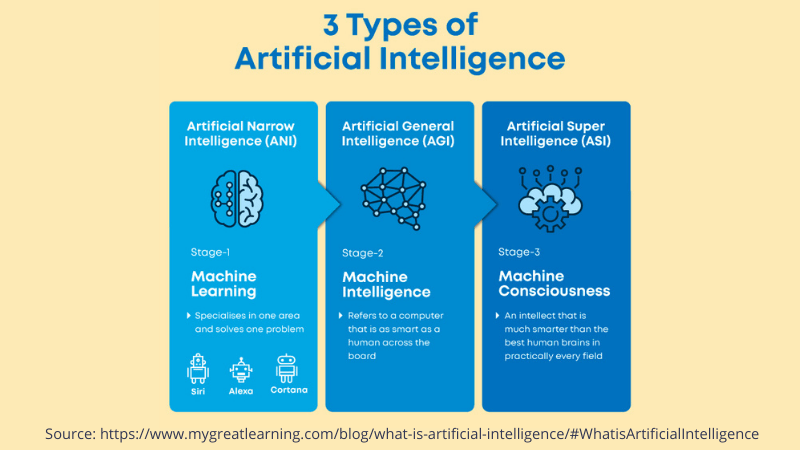

Understanding AI

What is AI?

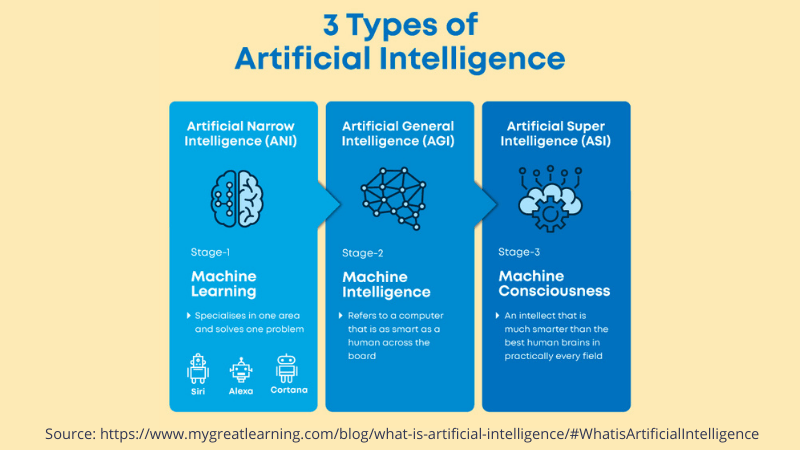

Artificial Intelligence, or AI, refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. It involves the development of computer systems that can perform tasks that would typically require human intelligence, such as visual perception, speech recognition, decision-making, and problem-solving. AI encompasses a wide range of technologies and techniques that enable machines to perform intelligent tasks and improve their performance over time.

AI and Machine Learning

Machine Learning is a subset of AI that focuses on utilizing algorithms and statistical models to enable machines to learn from data without being explicitly programmed. It involves the development of models and algorithms that allow machines to automatically learn and improve from experience or examples. Machine Learning plays a crucial role in AI as it provides the capability for machines to analyze, interpret, and make predictions or decisions based on data.

AI and Deep Learning

Deep Learning is a subfield of Machine Learning that focuses on neural networks with multiple layers, which process and learn from complex and large-scale data. These networks are loosely inspired by the structure of the human brain, and their stacked layers allow machines to extract meaningful patterns and features from raw data. Deep Learning has revolutionized AI by enabling machines to reach high levels of accuracy in tasks such as image and speech recognition, natural language processing, and autonomous driving.

AI and Natural Language Processing

Natural Language Processing (NLP) is another critical aspect of AI that deals with the interaction between computers and human language. It involves the development of algorithms and models that enable machines to understand, interpret, and generate human language in a way that is both meaningful and contextually relevant. NLP is at the core of applications such as language translation, sentiment analysis, and chatbots, allowing machines to communicate and interact with humans in a more natural and intelligent manner.

The Components of AI

Input

In AI systems, the input refers to the data or information that is fed into the system to initiate the process. This can include various types of data such as text, images, audio, or numerical values. The input serves as the starting point for the AI system to analyze and process in order to generate meaningful insights or outputs.

Data Collection

Data collection is a crucial step in AI as it involves gathering relevant and representative data that can be used to train and improve AI models. This can involve collecting data from various sources such as online databases, sensor devices, social media platforms, or even manually labeling and annotating data. The quality and quantity of the data collected significantly impact the performance and generalization capabilities of AI models.

Pre-Processing

Once the data is collected, it often requires pre-processing to remove noise, inconsistencies, or unwanted information. Pre-processing involves tasks such as cleaning the data, handling missing values, scaling or normalizing the data, and transforming it into a suitable format for further analysis. Pre-processing ensures that the data is in a form that can be effectively utilized by AI algorithms.
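Two of the pre-processing tasks mentioned above, handling missing values and scaling, can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names and the sample temperatures are made up, and filling gaps with the mean is only one of several reasonable strategies.

```python
from statistics import mean

def fill_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical sensor readings with one missing entry
raw_temperatures = [10.0, None, 20.0, 30.0]
cleaned = fill_missing(raw_temperatures)
scaled = min_max_scale(cleaned)
print(cleaned)  # [10.0, 20.0, 20.0, 30.0]
print(scaled)   # [0.0, 0.5, 0.5, 1.0]
```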

Algorithm and Model Selection

Selecting the appropriate algorithms and models is a critical step in AI. Different algorithms and models are designed to solve specific types of problems and handle different types of data. The choice of the algorithm and model depends on factors such as the nature of the problem, the available data, and the desired outputs. The selected algorithm or model acts as the foundation for training and generating predictions or decisions.

Training

Training is a key component of AI where the selected algorithm or model is trained on the collected and pre-processed data. During training, the algorithm learns from the data and adjusts its parameters or weights to minimize errors or maximize performance. Training is usually iterative: the model makes predictions, the error against the desired outputs is measured, and an optimization technique updates the model’s parameters. In neural networks, these steps are known as forward propagation, backpropagation, and a gradient-based update.
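As a rough sketch of what "adjusting parameters to minimize errors" can look like, the example below fits a straight line to four points with gradient descent. The model (one weight `w` and one bias `b`), the learning rate, and the data are all assumptions chosen for illustration.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors under the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

With these settings the learned values end up very close to the slope 2 and intercept 1 that generated the data.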

Testing and Evaluation

After the training phase, the AI model needs to be tested and evaluated to assess its performance and generalization capabilities. Testing involves using a separate set of data, known as the testing data, to validate the model’s predictions or decisions. Evaluation metrics such as accuracy, precision, recall, or F1 score are used to measure the model’s performance and compare it against predefined criteria or benchmarks.
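The metrics named above can be computed directly from a list of true labels and a list of predictions. The sketch below assumes a binary task where the label `1` is the positive class; the function name and the sample labels are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this toy case there are three true positives, one false positive, and one false negative, so all four metrics come out at 0.75.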

Output

The output of an AI system refers to the results, predictions, or decisions generated by the trained model. The output can take various forms depending on the task and application, such as a classification label, a probability score, a text response, a generated image, or a recommendation. The quality and reliability of the output depend on the accuracy and robustness of the AI model as well as the quality of the input data.

Data in AI

The Importance of Data

Data is the foundation of AI as it provides the necessary information for machines to learn, reason, and make informed decisions. It is crucial to have high-quality and relevant data to ensure accurate and reliable AI models. Data plays a vital role in every stage of the AI pipeline, from training and testing to validation and deployment. The availability of diverse and representative data allows AI systems to learn and generalize effectively.

Types of Data

In AI, data can be categorized into different types based on its format and properties. The most common types of data include:

- Numerical data: Data represented as numbers, such as temperature, stock prices, or sensor readings.

- Categorical data: Data that represents qualitative characteristics or attributes, such as colors, categories, or labels.

- Textual data: Data that consists of written text or transcribed speech, such as articles, reviews, or social media posts.

- Image data: Data in the form of visual images or pictures, such as photographs, diagrams, or microscopy images.

- Audio data: Data that represents sound or voice, such as music recordings, speech, or environmental sounds.

Understanding the type of data is essential for selecting appropriate AI techniques and models that can handle and process that specific type of data effectively.

Data Collection Methods

Data collection methods vary depending on the nature of the problem, the type of data required, and the available resources. Some common methods of data collection include:

- Online data collection: Gathering data from online sources such as websites, social media platforms, or online databases.

- Sensor data collection: Collecting data from physical sensors or IoT (Internet of Things) devices, such as temperature sensors, GPS sensors, or accelerometers.

- Manual data collection: Collecting data manually by human annotators or experts, such as labeling images or transcribing audio recordings.

- Survey or questionnaire data collection: Collecting data by designing and administering surveys or questionnaires to gather specific information or opinions.

Each data collection method has its own advantages and challenges, and the choice of method depends on factors such as the data requirements, the desired quality of data, and the available resources.

Data Cleaning and Pre-Processing

Data cleaning and pre-processing are crucial steps in AI as they ensure the quality, consistency, and relevance of the collected data. Data cleaning involves tasks such as removing duplicate or irrelevant data, handling missing values, correcting inconsistencies, or removing outliers. Data pre-processing involves tasks such as scaling or normalizing the data, transforming categorical data into numerical format, or splitting the data into training and testing sets. Data cleaning and pre-processing ensure that the data is ready for analysis and training AI models.
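Here is a small sketch of two of these steps: removing duplicate records and turning a categorical column into numbers with one-hot encoding. The helper names and the `(color, quantity)` records are hypothetical.

```python
def remove_duplicates(rows):
    """Drop repeated rows while keeping the original order."""
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique

def one_hot_encode(values):
    """Turn each category into a 0/1 vector with one slot per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories

# Hypothetical (color, quantity) records containing one exact duplicate
rows = [("red", 3), ("green", 5), ("red", 3), ("blue", 2)]
unique_rows = remove_duplicates(rows)
encoded, categories = one_hot_encode([color for color, _ in unique_rows])
print(categories)  # ['blue', 'green', 'red']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0]]
```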

Machine Learning in AI

Supervised Learning

Supervised Learning is a type of Machine Learning that involves training a model on a labeled dataset. In this approach, the input data is accompanied by corresponding target labels, and the model learns to map the input data to the target labels. The trained model can then make predictions or classify new input data based on its learned patterns and relationships. Supervised Learning is commonly used in tasks such as image recognition, text classification, and prediction modeling.
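One of the simplest supervised methods is the k-nearest-neighbors classifier, used here only as an example of learning from labeled data: a new point receives the most common label among the labeled points closest to it. The points and the labels "small" and "large" are made up.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Label a query point by majority vote among its k nearest neighbors."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Labeled training data: two well-separated groups of 2D points
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5),
                (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_labels = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(train_points, train_labels, (1.2, 1.4)))  # small
print(knn_predict(train_points, train_labels, (6.8, 6.2)))  # large
```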

Unsupervised Learning

Unsupervised Learning is a type of Machine Learning where the input data is unlabeled, and the model learns patterns and relationships in the data without explicit target labels. The model identifies clusters, patterns, or hidden structures in the data, allowing for insights and discoveries. Unsupervised Learning is commonly used in tasks such as clustering, dimensionality reduction, and anomaly detection.
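Clustering can be illustrated with k-means, a common unsupervised algorithm picked here as an example. The sketch works on one-dimensional values to stay short; the starting centroids and the data are assumptions.

```python
def k_means_1d(values, centroids, iterations=10):
    """Group numbers into clusters around the nearest centroid."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        # Assignment step: each value joins its closest centroid
        for v in values:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Unlabeled values that visibly form two groups
values = [1.0, 1.2, 0.8, 9.0, 9.5, 10.5]
centroids, clusters = k_means_1d(values, centroids=[0.0, 5.0])
print(clusters)  # [[1.0, 1.2, 0.8], [9.0, 9.5, 10.5]]
```

No labels are supplied anywhere; the two groups emerge from the distances between the values alone.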

Reinforcement Learning

Reinforcement Learning is a type of Machine Learning where an agent learns to make decisions and take actions in an environment to maximize rewards or achieve a specific goal. It involves the interaction between the agent, the environment, and a reward system. The agent learns through trial and error, receiving feedback in the form of rewards or penalties for its actions. Reinforcement Learning is commonly used in autonomous robotics, game playing, and optimization problems.
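The loop of acting, receiving a reward, and updating can be sketched with tabular Q-learning, one standard reinforcement learning algorithm chosen here for illustration. The environment is a made-up corridor of five positions where the agent starts at position 0 and is rewarded only for reaching position 4. For simplicity the agent explores by picking actions uniformly at random, which Q-learning tolerates because it learns about the best action regardless of the action actually taken.

```python
import random

N_STATES = 5        # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = [-1, +1]  # move left or move right

def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a table of action values by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(len(ACTIONS))  # explore uniformly at random
            next_state, reward, done = step(state, ACTIONS[a])
            # Value of the best action available from the next state
            best_next = 0.0 if done else max(q[next_state])
            # Move the estimate toward reward + discounted future value
            q[state][a] += alpha * (reward + gamma * best_next - q[state][a])
            state = next_state
    return q

q_table = q_learning()
# Greedy policy for the non-terminal positions 0..3
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

After training, the learned values favor moving right in every position, since that is the shortest path to the reward.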

Deep Learning in AI

Neural Networks

Neural Networks are the building blocks of Deep Learning. They are mathematical models inspired by the structure and function of the human brain. Neural Networks consist of interconnected nodes, called neurons, organized in layers. Each neuron receives input, applies a mathematical transformation, and passes the output to the next layer. By combining multiple layers, Neural Networks can learn and extract complex patterns and features from data.
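The description above, where each neuron receives input, applies a transformation, and passes its output on, can be sketched as a forward pass through two small layers. The weights, biases, and the choice of the sigmoid activation are arbitrary values picked for illustration; in practice the weights would be learned.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Each neuron takes a weighted sum of the inputs, adds a bias,
    and applies the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# A tiny network: 2 inputs -> 2 hidden neurons -> 1 output neuron
inputs = [1.0, 0.0]
hidden = dense_layer(inputs, weights=[[0.5, -0.5], [-1.0, 1.0]], biases=[0.0, 0.0])
output = dense_layer(hidden, weights=[[1.0, 1.0]], biases=[-1.0])
print(hidden, output)
```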

Architecture

The architecture of a Deep Learning model refers to the arrangement and configuration of its layers and neurons. Deep Learning models can have various architectures, such as feedforward neural networks, recurrent neural networks, or convolutional neural networks. The architecture determines how the model processes and learns from the input data, as well as the complexity and capacity of the model to capture and represent different types of information.

Training and Optimization

Training a Deep Learning model involves feeding it with a large amount of labeled data and adjusting the model’s parameters or weights through an iterative optimization process. The model learns from the data by minimizing a loss function that measures the difference between the predicted outputs and the actual labels. Training and optimization techniques, such as backpropagation and gradient descent, are used to update the model’s parameters and improve its performance over time.
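In symbols, the optimization described above can be sketched as follows. The notation is assumed here for illustration and does not come from the article: $\theta$ stands for the model's parameters, $f_\theta$ for the model, $(x_i, y_i)$ for the $N$ labeled examples, $\ell$ for a per-example loss, and $\eta$ for the learning rate.

```latex
L(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell\big(f_\theta(x_i),\, y_i\big),
\qquad
\theta \leftarrow \theta - \eta \, \nabla_\theta L(\theta)
```

Backpropagation is the procedure that computes the gradient $\nabla_\theta L(\theta)$ layer by layer, and gradient descent applies the update on the right, repeated over many iterations.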

Natural Language Processing in AI

Processing Natural Language

Processing Natural Language involves the understanding, interpretation, and manipulation of human language by machines. Natural Language Processing (NLP) techniques allow machines to analyze and extract meaning from text, speech, or conversation. Typical NLP tasks include part-of-speech tagging, named entity recognition, sentiment analysis, and text summarization. NLP enables machines to comprehend and generate human language, opening up avenues for applications such as language translation, chatbots, and voice assistants.
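Most NLP pipelines begin by breaking text into tokens and turning them into numbers a model can use. A minimal sketch of that first step, using a simple regular expression and word counts (a "bag of words"), might look like this. Real tokenizers are considerably more sophisticated, and the function names are illustrative.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def bag_of_words(text):
    """Count how often each token occurs in the text."""
    return Counter(tokenize(text))

counts = bag_of_words("The cat sat on the mat. The mat was warm.")
print(tokenize("Hello, world!"))  # ['hello', 'world']
print(counts.most_common(2))      # [('the', 3), ('mat', 2)]
```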

Understanding and Generation

NLP models are designed to understand and generate human language in a way that is contextually relevant and meaningful. Understanding involves tasks such as parsing the structure of sentences, identifying entities or concepts, and extracting sentiment or intent from text. Generation involves tasks such as generating human-like responses, creating coherent and fluent text, or summarizing long documents. NLP models combine techniques such as machine learning, deep learning, and statistical modeling to achieve understanding and generation capabilities.

Applications of NLP

NLP has widespread applications across various domains and industries. Some common applications include:

- Language translation: NLP enables accurate and automatic translation between different languages, removing language barriers and facilitating communication.

- Sentiment analysis: NLP allows machines to analyze and understand the sentiment or emotion expressed in text, helping with tasks such as brand monitoring, customer feedback analysis, or social media sentiment analysis.

- Chatbots and virtual assistants: NLP enables machines to understand and respond to human queries or commands, providing automated customer support, personalized recommendations, or information retrieval.

- Text summarization: NLP techniques can condense long texts or articles into a concise and coherent summary, helping readers grasp the key points of a document quickly.

- Information extraction: NLP models can extract structured information from unstructured text, such as extracting named entities, relationships, or events from news articles or web pages.

AI Algorithms and Models

Decision Trees

Decision Trees are simple yet powerful algorithms that predict outcomes by creating a tree-like model of decisions and their possible consequences. Each node in the tree represents a decision or a test on an attribute, and the branches represent the possible outcomes or values of the attribute. Decision Trees are widely used in classification and regression tasks due to their interpretability and ease of use.
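A tree is built by repeatedly choosing the test that best separates the classes. The sketch below shows a single such choice: it scans candidate thresholds on one numeric attribute and keeps the one with the lowest Gini impurity, a commonly used split criterion assumed here. The ages and the "yes"/"no" labels are invented.

```python
def gini(labels):
    """Gini impurity: 0.0 when all labels in the group are the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_threshold(values, labels):
    """Find the test 'value <= threshold' with the lowest weighted impurity."""
    best = (None, float("inf"))
    for threshold in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best

# Hypothetical data: customer age and whether they bought a product
ages = [22, 25, 30, 35, 40, 50]
bought = ["no", "no", "no", "yes", "yes", "yes"]
threshold, impurity = best_threshold(ages, bought)
print(threshold, impurity)  # 30 0.0
```

A full decision tree applies the same search recursively to each resulting group until the groups are pure or a depth limit is reached.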

Random Forests

Random Forests are an ensemble learning method that combines multiple Decision Trees to improve the accuracy and robustness of predictions. Each Decision Tree in the Random Forest is trained on a random sample of the data, usually drawn with replacement, and typically considers only a random subset of the features at each split. The final prediction is made by aggregating the predictions of all the trees, by majority vote for classification or by averaging for regression. Random Forests are particularly effective in handling high-dimensional data and reducing overfitting.
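The two ideas at work here, training each tree on a different random sample and combining the trees by voting, can be sketched as follows. This is a deliberately reduced version: each "tree" is a one-split stump on a single feature, so the random feature selection used by real Random Forests is left out. All names and data are illustrative.

```python
import random
from collections import Counter

def train_stump(samples):
    """Fit a one-split tree: pick the threshold with the fewest training errors."""
    best = None
    for threshold in sorted({x for x, _ in samples}):
        left = [y for x, y in samples if x <= threshold]
        right = [y for x, y in samples if x > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda x: left_label if x <= threshold else right_label

def train_forest(samples, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.choice(samples) for _ in samples]
        forest.append(train_stump(bootstrap))
    return forest

def forest_predict(forest, x):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]

samples = [(22, "no"), (25, "no"), (30, "no"),
           (35, "yes"), (40, "yes"), (50, "yes")]
forest = train_forest(samples)
print(forest_predict(forest, 20), forest_predict(forest, 55))
```

Individual stumps may disagree because each saw a different sample, but the vote smooths out their individual mistakes.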

Support Vector Machines

Support Vector Machines (SVMs) are a popular supervised learning algorithm used for both classification and regression tasks. For classification, an SVM finds the hyperplane that separates the classes with the largest possible margin. Kernel functions let it implicitly map the input data into a high-dimensional feature space, so that classes that are not linearly separable in the original space can still be separated. SVMs are known for their ability to handle complex decision boundaries and generalize well to unseen data.

Naive Bayes Classifier

The Naive Bayes classifier is a simple yet powerful probabilistic classifier based on Bayes’ theorem. It assumes that features are conditionally independent given the class. This assumption rarely holds exactly, but it makes the classifier computationally efficient and often effective for text classification tasks. Naive Bayes is widely used in spam filtering, sentiment analysis, and document classification.
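For text, the independence assumption means the probability of a message under each class is the class prior multiplied by one probability per word. The sketch below implements this with word counts and add-one (Laplace) smoothing, a common choice assumed here so that unseen words do not zero out the product. The four training messages are invented.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(label for _, label in documents)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in documents:
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary

def predict(model, text):
    """Return the class with the highest log-probability for the text."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the log prior, then add one log-likelihood per word
        log_prob = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            count = word_counts[label][word] + 1  # add-one smoothing
            log_prob += math.log(count / (total_words + len(vocabulary)))
        scores[label] = log_prob
    return max(scores, key=scores.get)

documents = [
    ("win a free prize now", "spam"),
    ("free money offer", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train_naive_bayes(documents)
print(predict(model, "free prize offer"))  # spam
print(predict(model, "monday meeting"))    # ham
```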

Neural Networks

Neural Networks are one of the fundamental AI models that are used across various domains. They are composed of interconnected layers of artificial neurons that process and transform input data. Neural Networks learn by adjusting the weights and biases of the neurons through an iterative optimization process. They have shown remarkable performance in tasks such as image recognition, speech synthesis, and sequence prediction.

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a specialized type of Neural Network that is particularly effective in handling visual data, such as images or videos. CNNs are designed to automatically and hierarchically learn local patterns and spatial structures present in the input data. They consist of convolutional layers, pooling layers, and fully connected layers, which allow them to extract high-level features and make accurate predictions in tasks such as image classification, object detection, and semantic segmentation.
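The core operation of a convolutional layer is sliding a small grid of weights (a kernel) over the image and computing a weighted sum at each position. A minimal sketch follows, with a hand-picked kernel that responds to vertical edges. In a real CNN the kernel values are learned during training rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    compute the weighted sum at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 image that is dark on the left half and bright on the right half
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds where brightness increases left to right
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is non-zero only in the middle column, exactly where the dark and bright halves meet.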

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a type of Neural Network designed to handle sequential or time-series data, such as speech, text, or sensor data. RNNs have feedback connections that allow information to persist and pass from one step to another, enabling them to capture temporal dependencies and context in the input data. RNNs are widely used in tasks such as natural language processing, speech recognition, and machine translation.
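The feedback connection can be sketched with a single recurrent unit whose hidden state depends on both the current input and its own previous state. The scalar weights and the `tanh` activation are assumptions chosen to keep the example small.

```python
import math

def rnn_forward(inputs, w_input, w_hidden, bias, h0=0.0):
    """Run one recurrent unit over a sequence, carrying the hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state
        h = math.tanh(w_input * x + w_hidden * h + bias)
        states.append(h)
    return states

# Same weights, two sequences: one starts with a signal, one is all zeros
with_signal = rnn_forward([1.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
all_zeros = rnn_forward([0.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
print(with_signal)
print(all_zeros)  # [0.0, 0.0, 0.0]
```

In the first sequence the hidden state stays above zero at the second and third steps even though those inputs are zero, which is the "memory" of the earlier input that the paragraph describes.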

Training and Testing

Training Data

Training data is used to train AI models by providing input samples along with the corresponding output or target labels. The training data needs to be representative and diverse to ensure that the model can learn and generalize effectively. The quality and quantity of the training data significantly impact the performance and accuracy of the trained model.

Validation Data

Validation data is used to evaluate the model during the training process. It allows for monitoring progress and tuning the model’s hyperparameters or configuration to improve its performance. The validation data is separate from the training data and is not used to fit the model’s parameters. Because it guides these tuning decisions, however, its scores tend to be somewhat optimistic, which is why a separate testing set is kept for the final evaluation.

Testing Data

Testing data is used to assess the final performance and accuracy of the trained model after it has been trained and optimized using the training data. The testing data is independent of the training and validation data, and it provides an unbiased evaluation of the model’s ability to make predictions or decisions on unseen data. Testing data helps to assess the model’s generalization capabilities and identify any potential issues or limitations.
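A three-way split into training, validation, and testing data can be sketched as below. The 60/20/20 proportions and the fixed random seed are assumptions for illustration; suitable proportions depend on how much data is available.

```python
import random

def split_dataset(samples, validation_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle the samples and cut them into train, validation, and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * validation_fraction)
    test = shuffled[:n_test]
    validation = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, validation, test

# Ten hypothetical sample IDs
train, validation, test = split_dataset(range(10))
print(len(train), len(validation), len(test))  # 6 2 2
```

Shuffling before cutting keeps any ordering in the original data, such as records sorted by date or class, from leaking into one of the sets.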

Overfitting and Underfitting

Overfitting and underfitting are common challenges in training AI models. Overfitting occurs when a model fits the training data too closely, including its noise, and fails to generalize to unseen data. It typically happens when the model is too complex or when the training data is limited or noisy. Underfitting occurs when a model is too simple and fails to capture the patterns and relationships in the training data. Balancing the complexity of the model against the amount of available training data is crucial to mitigate overfitting and underfitting.

Performance Measures

Performance measures are used to evaluate the performance and accuracy of AI models. Different performance measures are used depending on the nature of the problem and the desired outcomes. Common measures include accuracy, precision, recall, and F1 score for classification, the area under the receiver operating characteristic curve (ROC AUC) for classifiers that output scores, and mean squared error for regression. These measures provide insights into the model’s performance, its strengths, and its limitations.
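Two of these measures are short enough to write out by hand. The sketch below computes mean squared error for numeric predictions and ROC AUC for scored binary predictions, using the interpretation of AUC as the fraction of positive/negative pairs in which the positive example gets the higher score. The sample values are invented, and the binary labels are assumed to be `0` and `1`.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def roc_auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))

mse = mean_squared_error([3.0, 5.0, 2.0], [2.0, 5.0, 4.0])
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(mse)  # (1 + 0 + 4) / 3
print(auc)  # 0.75
```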

Use Cases of AI

Autonomous Vehicles

Autonomous vehicles, also known as self-driving cars, are one of the most prominent applications of AI. AI technologies such as computer vision, sensor fusion, and machine learning enable autonomous vehicles to perceive the environment, make decisions, and navigate safely without human intervention. Autonomous vehicles have the potential to revolutionize transportation, enhancing safety, efficiency, and accessibility.

Fraud Detection

AI plays a crucial role in fraud detection and prevention in various industries, such as banking, insurance, and e-commerce. AI algorithms and models analyze massive amounts of transactional data and detect anomalies or patterns that indicate fraudulent activities. AI-powered fraud detection systems can continuously learn and adapt to new fraud trends and techniques, minimizing financial losses and protecting customers.

Healthcare

AI has the potential to transform healthcare by improving diagnosis, treatment, and patient care. AI models can analyze medical images, such as X-rays or MRIs, to detect diseases or abnormalities with high accuracy. Natural Language Processing techniques can extract relevant information from medical records, research papers, or clinical trials to aid in decision-making and research. AI can also assist in personalized medicine, drug discovery, and remote patient monitoring.

Personalized Recommendations

AI-based personalized recommendation systems are widely used in e-commerce, entertainment, and social media platforms. These systems analyze user behavior, preferences, and historical data to provide personalized suggestions and recommendations. By understanding the user’s interests and preferences, AI can enhance user experience, increase engagement, and improve customer satisfaction.

Virtual Assistants

Virtual assistants, such as Siri, Alexa, or Google Assistant, are AI-powered applications that provide voice-based assistance and perform tasks based on user commands or queries. Natural Language Processing and speech recognition technologies enable virtual assistants to understand and respond to human language in a conversational and contextually meaningful manner. Virtual assistants can assist in tasks such as setting reminders, searching the internet, playing music, or controlling smart home devices.

Ethical Considerations in AI

Bias and Fairness

AI systems are prone to inheriting the biases present in the data used for training. This can result in biased outputs, discriminatory decisions, or the perpetuation of unfairness. It is essential to ensure that AI models are trained on diverse and representative data and regularly monitored for biases. Ethical considerations such as fairness, transparency, and inclusivity need to be addressed to mitigate biases in AI applications.

Privacy and Security

AI systems often deal with sensitive and personal data, raising concerns about privacy and security. It is crucial to design AI systems that prioritize data privacy, protection, and secure storage. Technical safeguards such as data anonymization, encryption, and access control, backed by appropriate regulations and policies, need to be implemented to protect personal information and prevent unauthorized access or misuse of data.

Accountability and Transparency

As AI systems become more autonomous and make important decisions, it is essential to ensure accountability and transparency. AI models should be designed in a way that allows for explanations and justifications of their decisions or predictions. The processes and methods used to train and optimize AI models should be transparent and subject to scrutiny. Establishing clear guidelines and regulations on the ethical use of AI is essential to promote trust and accountability.

In conclusion, AI is a rapidly evolving field that encompasses various technologies, techniques, and applications. Understanding the components of AI, such as data collection, algorithms, and models, is crucial for developing and deploying effective AI systems. Machine Learning, Deep Learning, and Natural Language Processing play significant roles in enabling AI systems to learn, reason, and interact with humans. However, ethical considerations such as bias, privacy, and accountability need to be carefully addressed to ensure the responsible and beneficial use of AI in society. With the right approach, AI has the potential to revolutionize industries, improve decision-making, and enhance the quality of life.