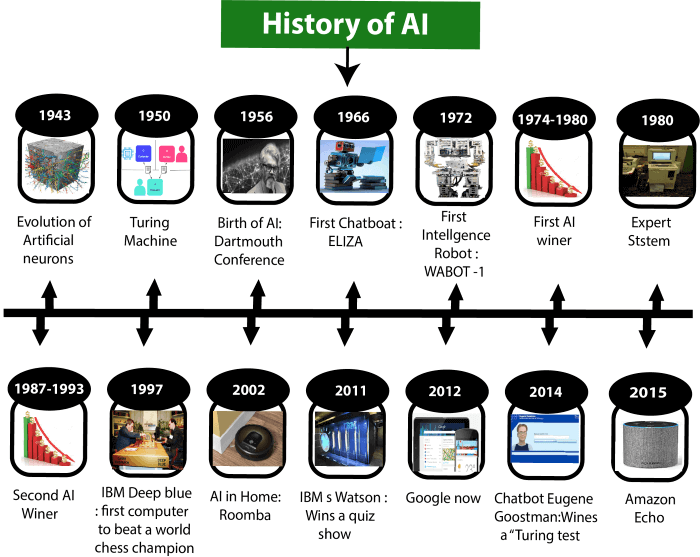

The History Of AI

Imagine a world where machines can learn, reason, and problem-solve, mimicking human intelligence. This captivating article takes you on a fascinating journey through “The History of AI,” exploring the roots, breakthroughs, and remarkable advancements that have shaped the development of Artificial Intelligence. From its humble beginnings to the cutting-edge technologies of today, brace yourself for an enlightening exploration of the incredible progress we’ve made in this extraordinary field.

This image is property of qbi.uq.edu.au.

The beginnings of AI

The birth of AI

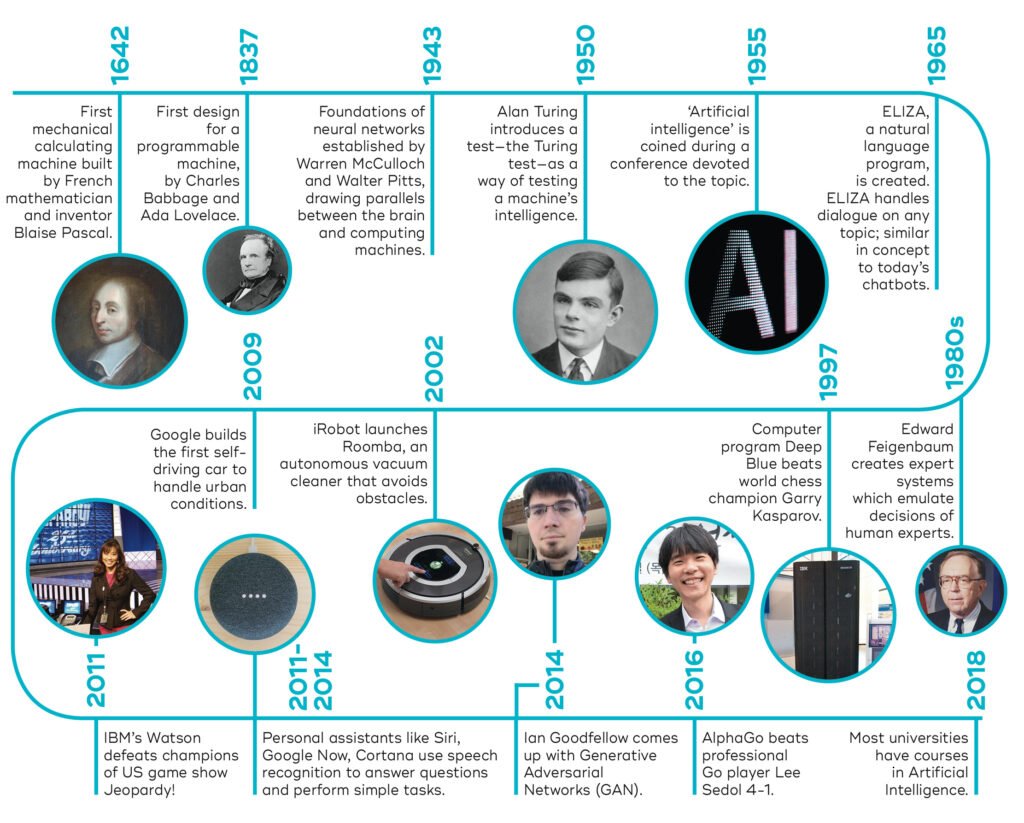

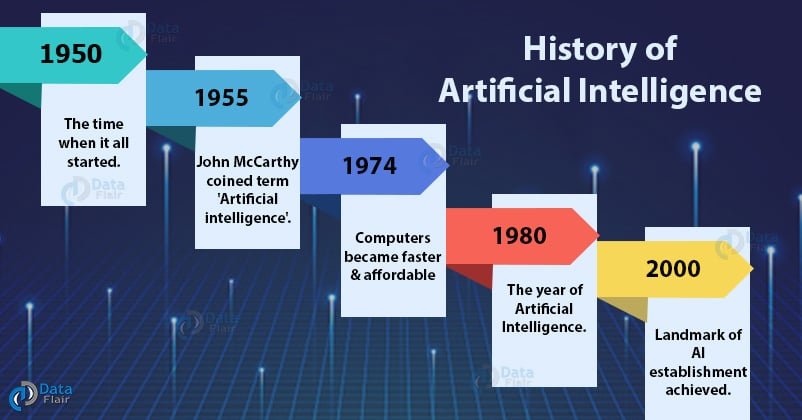

The birth of AI can be traced back to the mid-20th century, when researchers began to explore the concept of creating machines capable of mimicking human intelligence. The term “artificial intelligence” itself was coined by John McCarthy in the 1955 proposal for the Dartmouth Summer Research Project on Artificial Intelligence, the 1956 workshop now known as the Dartmouth Conference. This conference brought together a group of prominent scientists and mathematicians who shared a common interest in studying and developing intelligent machines.

Early pioneers in AI

During the early days of AI, several pioneers made significant contributions to the field. One of the most influential figures was Alan Turing, who described the “Turing machine,” an abstract model of computation, in 1936. In his 1950 paper “Computing Machinery and Intelligence,” he proposed the imitation game, now known as the Turing test, as a way to judge whether a machine could exhibit intelligent behavior. Another pioneer, John McCarthy, is often referred to as the “Father of AI” for creating the programming language LISP in 1958 and for his role in organizing the Dartmouth Conference.

The Dartmouth Conference

The Dartmouth Conference, held in the summer of 1956, is often considered the birthplace of AI. The conference aimed to explore the possibilities of creating machines that could exhibit intelligent behavior. The attendees, including John McCarthy, Marvin Minsky, Allen Newell, and Herbert A. Simon, discussed and debated various aspects of AI research, laying the foundation for future developments in the field.

The emergence of expert systems

Introduction of expert systems

Expert systems emerged as a significant development in the field of AI during the 1970s and 1980s. These systems aimed to replicate the knowledge and decision-making abilities of human experts in specific domains. By encoding the expertise of human professionals into a computer program, expert systems could provide valuable insights and recommendations in areas such as medicine, finance, and engineering.

Prolog and rule-based systems

Prolog, a logic programming language created in 1972, became widely used for building expert systems, alongside LISP. Prolog lets programmers state facts and rules declaratively, and those rules map naturally onto rule-based expert systems. Such systems apply a set of predefined if-then rules to the facts supplied by the user in order to reach conclusions or recommend decisions.
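To make the rule-based idea concrete, here is a minimal sketch of a forward-chaining rule engine in Python. The rules and fact names are a hypothetical toy example, not taken from any real expert system. Note also that Prolog itself answers queries by backward chaining (working from a goal back to known facts), so this illustrates the general rule-based approach rather than Prolog’s execution model.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (conditions, conclusion): it fires when every condition is a known fact.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy rules, for illustration only (not medical advice).
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "possible_flu"),
    (frozenset({"possible_flu", "short_of_breath"}), "refer_to_doctor"),
    (frozenset({"rash"}), "possible_allergy"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule if all its conditions hold and it adds something new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"fever", "cough", "short_of_breath"}
    derived = forward_chain(observed, RULES)
    print(sorted(derived - observed))  # conclusions only
```

Running it prints `['possible_flu', 'refer_to_doctor']`: the second conclusion is only reachable because the first rule added `possible_flu`, which is the chaining that gives these systems their name.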

Applications of expert systems

Expert systems found wide-ranging applications. In medicine, they helped diagnose diseases and suggest treatments based on symptoms and patient data. In finance, they assisted in analyzing market trends and making investment decisions. Expert systems were also employed in engineering, aerospace, and logistics, where their ability to deliver rapid, accurate decisions proved invaluable.

Machine learning and neural networks

The development of machine learning

Machine learning became a significant focus within AI research during the 1980s and 1990s. It aimed to develop algorithms and models that could learn from data and improve their performance over time without explicit programming. This shift marked a departure from traditional rule-based systems and introduced a more data-driven approach to AI.
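As a contrast with hand-written rules, the sketch below learns a relationship from examples instead of being told it: it fits a straight line y ≈ w·x + b by gradient descent on mean squared error. The data points, learning rate, and number of epochs are illustrative assumptions; real machine learning systems use far richer models, but the principle of adjusting parameters to reduce error on data is the same.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float],
             lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    """Learn w and b in y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Example data generated by y = 2x + 1; the program is never told this rule.
    w, b = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(f"w = {w:.3f}, b = {b:.3f}")
```

This should print `w = 2.000, b = 1.000`: the parameters were recovered from the data alone, with no rule about the relationship written into the program.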

The rise of neural networks

Neural networks, loosely inspired by the structure of the human brain, played a pivotal role in advancing machine learning. A neural network consists of interconnected artificial neurons, each of which computes a weighted sum of its inputs and passes the result through a simple function. Through a process called “training,” the network repeatedly adjusts these weights to reduce its errors on example data, which enables it to learn patterns and relationships and to perform tasks such as image recognition, natural language processing, and even playing games.
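The simplest illustration of training is a single artificial neuron, the perceptron, trained with the classic perceptron learning rule. The sketch below teaches it the logical AND function; the data set, learning rate, and epoch count are illustrative choices rather than anything prescribed by the text.

```python
from typing import List, Tuple

# Each example is (inputs, target output).
Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, inputs: List[float]) -> int:
    """Fire (output 1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data: List[Example], lr: float = 1.0,
                     epochs: int = 20) -> Tuple[List[float], float]:
    """Perceptron learning rule: nudge the weights toward the correct output."""
    # Starting from zero weights, lr only rescales the result,
    # so 1.0 keeps the arithmetic exact.
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)  # -1, 0, or 1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Logical AND: the neuron should output 1 only when both inputs are 1.
AND_DATA: List[Example] = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_DATA)
    print([predict(w, b, x) for x, _ in AND_DATA])
```

After a few passes over the data it prints `[0, 0, 0, 1]`. A single perceptron can only separate its inputs with a straight line, so it cannot learn a function such as XOR; connecting many neurons in layers removes that limitation.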

Applications of machine learning and neural networks

The applications of machine learning and neural networks have expanded rapidly in recent years. They have been instrumental in image and speech recognition, natural language processing, recommender systems, fraud detection, and autonomous vehicles. Their ability to analyze vast amounts of data and extract meaningful insights has revolutionized industries such as finance, healthcare, marketing, and e-commerce.

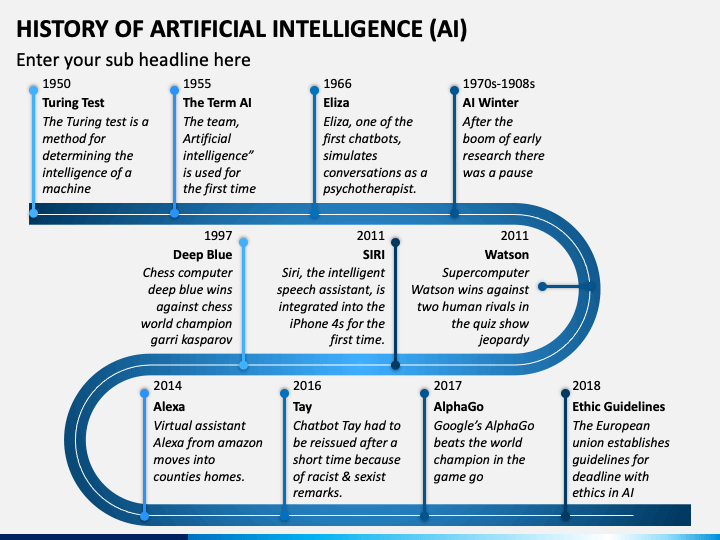

AI winter

The onset of the first AI winter

Despite early progress in AI research, the field went through periods of sharply reduced funding and interest known as “AI winters.” The first AI winter began in the mid-1970s and lasted until around 1980. Several factors contributed to this decline, including the unfulfilled promises of early AI research, the limited computing power of the time, and the combinatorial explosion that made many problems intractable once they grew beyond small test cases. In the UK, the critical 1973 Lighthill Report led to deep cuts in AI funding. The disillusionment with AI during this period reduced public and government support for further research.

Causes and consequences

A second AI winter set in during the late 1980s, when the market for specialized LISP machines collapsed and many expert systems proved costly to maintain. Both winters resulted from inflated expectations and a failure to deliver on the ambitious promises of AI technology. The lack of progress toward human-level intelligence and the inability of AI systems to handle complex, real-world tasks reliably led to skepticism and declining interest in the field. As a result, funding for AI research decreased, and many AI projects were abandoned.

Revival and subsequent AI winters

Following the AI winters, interest in AI gradually revived during the 1990s and early 2000s. This revival was fueled by advancements in machine learning and the increasing availability of computational resources. Enthusiasm has cooled again at times when the technology failed to meet expectations or ran into significant challenges. Despite these setbacks, AI has made significant strides in recent years, thanks to breakthroughs in deep learning and data-driven approaches.

This image is property of data-flair.training.

The growth of natural language processing

Introduction of natural language processing

Natural language processing (NLP) is a subfield of AI that focuses on enabling computers to understand, interpret, and generate human language. Over the years, NLP has made remarkable progress in improving the accuracy and sophistication of language-based applications.

Achievements and challenges in NLP

NLP has achieved significant milestones in various tasks, such as machine translation, sentiment analysis, chatbots, and speech recognition. However, challenges remain in areas like understanding context, sarcasm, and ambiguity in human communication. The complexity of human language and cultural nuances poses ongoing challenges for NLP researchers.

Recent advancements in NLP

Recent advancements in NLP, particularly through deep learning techniques, have propelled the field forward. Deep learning models such as transformer networks, introduced in 2017, use a mechanism called attention to weigh how relevant each word in a passage is to every other word, and they have demonstrated impressive language generation and understanding capabilities. This has led to the development of voice assistants, language translation services, and other applications that enhance human-computer interactions.
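The core operation inside a transformer is attention. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(Q·Kᵀ/√d)·V, for a single head. It deliberately omits the learned projection matrices, multiple heads, masking, and batching that real transformer models use, and the tiny input vectors are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query matches each key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    # Three made-up token vectors, used as queries, keys, and values alike.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in attention(tokens, tokens, tokens):
        print([round(x, 3) for x in row])
```

Each output row is a blend of all the value vectors, weighted toward the tokens whose keys best match that row’s query; this is how a transformer lets every word draw on context from the rest of the passage.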

Computer vision and pattern recognition

The early days of computer vision

Computer vision, the field of AI that focuses on enabling machines to perceive and interpret visual information, has its roots in the 1960s and 1970s. Early research in computer vision aimed to develop algorithms that could recognize simple shapes and objects in images.

Advancements in pattern recognition

Pattern recognition, a key component of computer vision, involves identifying and classifying patterns in visual data. Advancements in machine learning techniques, especially convolutional neural networks (CNNs), have revolutionized pattern recognition. CNNs enable computers to recognize complex patterns and objects in images, leading to applications such as object detection, facial recognition, and autonomous navigation.
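The building block of a CNN is a small filter slid across an image. The sketch below applies a hand-picked 3×3 vertical-edge filter to a tiny made-up grayscale image using “valid” cross-correlation, which is what most deep learning libraries compute under the name convolution. In a real CNN the filter values are learned during training rather than chosen by hand, and many filters are stacked in layers.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation: slide the kernel over every position where it fits."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the patch under it.
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


# Hand-picked filter that responds to dark-to-bright transitions from left to right.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

if __name__ == "__main__":
    # 4x5 image: dark (0) on the left, bright (9) on the right.
    image = [[0, 0, 9, 9, 9] for _ in range(4)]
    for row in conv2d(image, VERTICAL_EDGE):
        print(row)
```

It prints `[27, 27, 0]` twice: the filter responds strongly where the patch straddles the dark-to-bright boundary and gives zero over the uniform bright region, which is the kind of local pattern a CNN’s first layers learn to detect.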

Applications of computer vision

Computer vision has found applications in various fields, including healthcare, security, robotics, and autonomous vehicles. In medicine, computer vision assists in diagnosing diseases from medical images, while in security, it helps in surveillance and facial recognition. The automotive industry utilizes computer vision to enable self-driving cars to perceive and interpret the surrounding environment accurately.

This image is property of static.javatpoint.com.

AI in popular culture

AI in movies and literature

AI has long captured the imaginations of filmmakers and authors, resulting in numerous portrayals in movies and literature. From the sentient computer HAL 9000 in “2001: A Space Odyssey” to the thought-provoking AI in “Ex Machina,” these fictional depictions have helped shape public perception and fueled discussions about the ethical implications of AI.

Ethical concerns raised by AI in popular culture

AI in popular culture often raises ethical concerns, exploring the potential dangers of unchecked AI development. Themes such as AI surpassing human intelligence, loss of control, and the moral responsibility of AI systems have been recurring motifs. These depictions serve as cautionary tales and encourage thoughtful consideration of the impact of AI on society.

Effect of popular culture on public perception of AI

Popular culture plays a significant role in shaping public perceptions of AI. Media portrayals often contribute to a sense of excitement, fear, or skepticism about AI technology. It is important to balance these fictional portrayals with accurate and comprehensive information to ensure a well-informed public discourse on AI and its potential benefits and risks.

The era of big data and AI

The synergy between big data and AI

The advent of big data, characterized by the availability of vast amounts of structured and unstructured data, has fueled the growth of AI. AI algorithms thrive on large datasets, enabling them to identify patterns, make predictions, and extract valuable insights.

Data-driven approaches in AI

Data-driven approaches, powered by AI, have revolutionized industries such as finance, marketing, healthcare, and customer service. Predictive analytics, recommendation systems, personalized marketing campaigns, and fraud detection are just a few examples of the applications where AI leverages big data to deliver tangible business value.

Impact of AI on big data analytics

AI has transformed the field of big data analytics, enabling organizations to derive meaningful insights from complex and diverse datasets. By automating data analysis and leveraging advanced algorithms, AI enables faster decision-making, improved operational efficiency, and more accurate predictions. With AI’s ability to handle and process huge volumes of data, the potential for unlocking valuable insights is unprecedented.

This image is property of cdn.sketchbubble.com.

Recent breakthroughs in AI

Deep learning and AlphaGo

Deep learning, a subfield of machine learning, has witnessed remarkable breakthroughs in recent years. Neural networks with many layers, known as deep neural networks, have displayed exceptional performance in a wide range of AI tasks. One notable achievement came in 2016, when Google DeepMind’s AlphaGo, which combined deep neural networks with Monte Carlo tree search, defeated Lee Sedol, one of the world’s strongest Go players, by four games to one, highlighting the power of deep learning in complex decision-making scenarios.

Autonomous vehicles and robotics

AI has revolutionized the development of autonomous vehicles and robotics. Self-driving cars, a prime example, leverage AI technologies such as computer vision, machine learning, and sensor fusion to perceive the environment and make real-time decisions. In robotics, AI enables the creation of intelligent machines that can perform complex tasks autonomously, ranging from industrial automation to healthcare assistance.

AI in healthcare

AI holds immense potential in transforming healthcare by improving diagnostics, patient care, and drug discovery. Machine learning algorithms can analyze medical images, detect diseases, and assist doctors in making accurate diagnoses. Natural language processing helps extract relevant information from medical records, enabling faster and more precise data-driven healthcare solutions. Additionally, AI is revolutionizing drug discovery by screening vast databases of compounds, accelerating the development of new therapies.

Future prospects and ethical considerations

The potential of AI in various fields

The potential applications of AI are vast and can revolutionize various fields, including transportation, finance, education, and agriculture. Self-driving cars could enhance road safety and reduce traffic congestion, while AI-powered financial systems could provide personalized investment advice. In education, AI can enable individualized learning experiences, and in agriculture, it can optimize crop yield and resource allocation.

Ethical considerations in AI development

As AI continues to advance, ethical considerations become increasingly important. Issues such as bias in algorithms, privacy concerns, job displacement, and autonomous weapon systems need careful deliberation and regulation. It is crucial to develop AI in a manner that promotes transparency, accountability, and fairness, ensuring that it benefits humanity as a whole.

Human-AI collaboration and augmentation

The future of AI lies in human-AI collaboration and augmentation, rather than a replacement of human capabilities. AI can complement human skills by automating repetitive tasks, providing personalized recommendations, and assisting in decision-making. Collaboration between humans and AI systems can lead to enhanced productivity, creativity, and problem-solving abilities, ultimately creating a more efficient and prosperous society.

In conclusion, the history of AI has seen significant milestones, from its birth and early pioneers to the emergence of expert systems, machine learning, natural language processing, computer vision, and its portrayal in popular culture. Despite the setbacks of AI winters, recent breakthroughs and the synergy with big data have propelled AI into new frontiers. As we look towards the future, the potential of AI in various fields is immense, but ethical considerations and human-AI collaboration must guide its development towards creating a better world.