The Ethical Implications Of AI: What Are The Ethical Considerations When Developing And Deploying AI Systems?

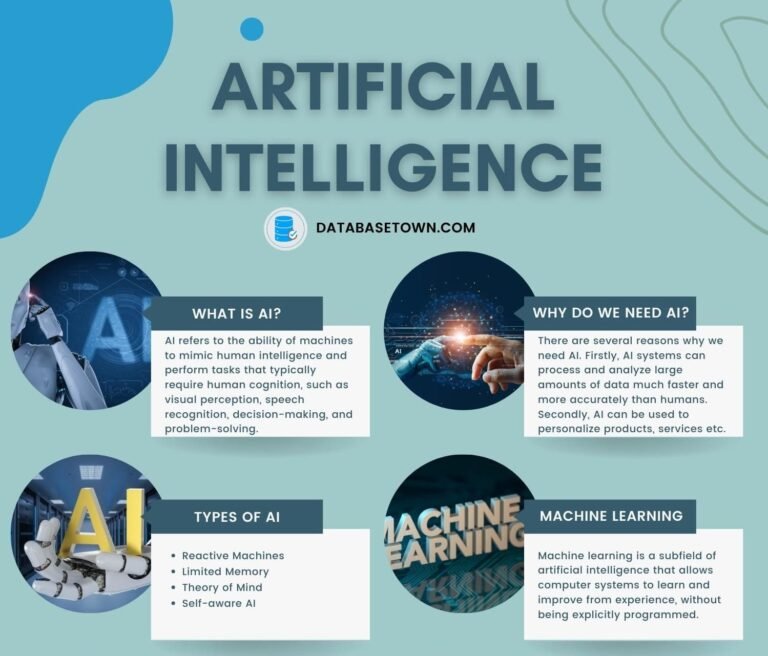

Artificial Intelligence (AI) is being integrated into more products and services than ever before, and the ethical implications of that integration can no longer be ignored. From bias and privacy concerns to job displacement and accountability, there are many ethical considerations to take into account when developing and deploying AI systems. With these concerns in mind, it is crucial to examine AI from several angles to ensure that these systems are developed and used in an ethical and responsible manner.

The Impact of AI on Privacy and Security

Data privacy and protection

AI technology has revolutionized the way we collect, analyze, and utilize data. While this has brought numerous benefits, it has also raised concerns about data privacy and protection. With AI systems constantly collecting and processing vast amounts of personal information, there is a risk of this data being misused or falling into the wrong hands. It is crucial to establish robust measures and regulations to ensure the privacy and security of individuals’ data in the age of AI.

Potential for surveillance and data misuse

The widespread adoption of AI technology has led to an increase in surveillance capabilities. AI-powered cameras, facial recognition systems, and predictive algorithms have raised concerns about the potential for widespread and invasive surveillance. It is essential to strike a balance between utilizing AI systems for security purposes and respecting individuals’ rights to privacy. Proper oversight and regulations must be in place to prevent any misuse of AI technology for surveillance purposes.

Algorithmic bias and discrimination

One of the key ethical considerations in AI systems is the potential for algorithmic bias and discrimination. AI algorithms are trained on large datasets, and if these datasets contain biased or discriminatory information, the algorithms may replicate and amplify those biases. This can lead to unfair outcomes, perpetuating existing social inequalities and discrimination. It is imperative to address this issue by training AI systems on diverse, representative datasets and by implementing mechanisms to detect and correct algorithmic bias.

Cybersecurity risks

As AI systems become increasingly integrated into various sectors, the risk of cybersecurity threats also grows. AI algorithms can be vulnerable to attacks and manipulation, leading to the compromise of sensitive data or the disruption of critical systems. It is crucial to prioritize cybersecurity measures when developing and deploying AI systems to protect against malicious actors and ensure the integrity and security of AI technologies.

Transparency and Explainability in AI Systems

Importance of transparency in AI decision-making

Transparency plays a crucial role in building trust and accountability in AI systems. Individuals impacted by AI decisions should have access to information about the factors considered and the reasoning behind those decisions. Transparent AI systems enable users to challenge or question outcomes, fostering a sense of fairness and promoting responsible use of AI technology.

Challenges in understanding black-box algorithms

One significant challenge in achieving transparency is the use of black-box algorithms, where the decision-making process is obscured or difficult to understand. Such algorithms, for example deep neural networks, reach their outputs through computations that are too complex for a person to trace step by step. It is important to develop strategies and techniques to enhance the explainability of AI systems, allowing users to understand the reasoning behind the decisions made by these algorithms.

Bias detection and mitigation

Transparency is closely linked to the detection and mitigation of algorithmic bias. By providing transparency into the decision-making process, it becomes easier to identify and address biases that may arise in AI systems. Techniques such as bias detection algorithms, fairness metrics, and post-processing steps can help in minimizing biases and promoting fair and equitable outcomes.
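To make the idea of a fairness metric concrete, here is a minimal sketch of one common choice, the demographic parity difference: the gap between the highest and lowest rate of positive decisions across groups. The function names, the sample predictions, and the group labels are all hypothetical and chosen only for illustration; a real audit would use the system's actual outputs and likely more than one metric.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: 1 = approved, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
# Hypothetical group membership for each decision above.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it flags where a closer look at the data and the decision process is warranted.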

Responsibility for explaining AI decisions

Determining who bears the responsibility for explaining AI decisions is a critical consideration. Should the burden of explanation fall on the AI system’s developers, the organizations deploying the AI systems, or the AI systems themselves? Clear guidelines and accountability frameworks need to be established to distribute responsibility appropriately and ensure that AI decisions are adequately explained to affected individuals.

Accountability and Responsibility for AI Actions

Assigning responsibility in autonomous AI systems

As AI systems become increasingly autonomous, it becomes essential to determine who is accountable for the actions and outcomes of these systems. If an autonomous AI system makes a decision with unintended negative consequences, establishing responsibility can be challenging. Proper governance frameworks and regulations should be put in place to clarify accountability and ensure that actors responsible for designing, deploying, or overseeing AI systems are held accountable for their actions.

Legal and ethical frameworks for accountability

Legal and ethical frameworks play a crucial role in establishing accountability for AI actions. Ethical guidelines and codes of conduct can provide a foundation for responsible use of AI technology. Additionally, legal frameworks need to adapt to address challenges presented by AI systems, ensuring that individuals’ rights are protected and those responsible for AI actions can be held accountable under the law.

Implications of AI system errors or failures

The implications of AI system errors or failures can be significant, ranging from financial losses to threats to human safety. It is crucial to implement mechanisms that detect and address errors and failures promptly. Regular monitoring, testing, and auditing of AI systems can help identify potential issues and mitigate risks before they escalate.

Preventing AI from being used for unethical purposes

As with any technology, there is a risk of AI being put to unethical uses. Whether it is for surveillance, discrimination, or manipulation, precautions must be taken to prevent the misuse of AI technology. This includes implementing robust ethical guidelines, establishing clear boundaries, and empowering regulatory bodies to ensure compliance with ethical standards.

Social and Economic Impact of AI

Displacement of jobs and income inequality

The rise of AI technology has led to concerns about job displacement and income inequality. As AI systems become more capable of performing tasks traditionally done by humans, some jobs may become obsolete, leading to job losses and economic disruption. It is essential to address these concerns by implementing measures such as retraining programs and social safety nets to support individuals affected by automation and mitigate potential economic disparities.

Bias in AI job hiring and evaluation processes

AI systems are increasingly being used in job hiring and evaluation processes, but there is a risk of bias in these systems. If the training data used to develop AI algorithms reflects existing biases, such as gender or racial biases, the AI systems may perpetuate these biases in the decision-making process. Efforts should be made to ensure that AI systems used in job hiring and evaluation are fair, unbiased, and capable of promoting diversity and inclusivity.

Ethical considerations in autonomous vehicles

The development of autonomous vehicles raises ethical considerations regarding safety, decision-making, and liability. AI algorithms in self-driving cars must make split-second decisions that can impact human lives. Questions such as who an autonomous vehicle should prioritize in an accident scenario or how liability should be determined need to be addressed to ensure the safe and ethical deployment of autonomous vehicles.

Social biases and reinforcement

AI systems can also inadvertently reinforce existing social biases and inequalities. If trained on biased data or operated in a biased environment, they may perpetuate discriminatory practices. It is essential to continuously monitor and address social biases in AI systems so that existing inequalities are not made worse.

Human Rights and AI

Potential violations of privacy rights

The increased use of AI technology can potentially infringe upon individuals’ privacy rights. AI systems that collect and analyze vast amounts of personal data may encroach upon individuals’ rights to privacy and autonomy. Safeguards must be put in place to ensure that individuals’ rights are respected, and that AI systems are deployed in a manner consistent with privacy laws and regulations.

Right to explanation and fair treatment

In the context of AI decision-making, individuals have the right both to fair treatment and to an explanation of how an AI system arrived at a specific decision. AI algorithms should be transparent enough to allow individuals to understand the rationale behind a decision affecting their lives, enabling them to challenge or question the decision if necessary.

Discrimination and inequality

AI systems have the potential to perpetuate discrimination and inequality if not properly designed and monitored. Ensuring that AI systems are fair, unbiased, and capable of addressing existing social inequalities is crucial for promoting equality and preventing discriminatory practices.

AI in military and conflict

The deployment of AI systems in the context of military and conflict raises significant ethical considerations. The development of autonomous weapons capable of making life or death decisions without human intervention poses risks to human rights and international law. Establishing clear guidelines and regulations for the ethical use of AI in military contexts is crucial to prevent unnecessary harm and ensure compliance with international humanitarian laws.

Ethics in AI Research and Development

Balancing scientific progress and ethical considerations

AI research and development involve a delicate balance between scientific progress and ethical considerations. While advancements in AI technology can bring immense benefits, it is important to ensure that these advancements are made in an ethical manner, considering their potential impacts on individuals and society as a whole.

Ethical guidelines for AI researchers

AI researchers should adhere to ethical guidelines and codes of conduct to ensure responsible research practices. These guidelines may include considerations for data collection and use, transparency, fairness, and accountability. By following these guidelines, researchers can contribute to the development and deployment of AI systems that align with ethical principles.

Human subject protection and consent

AI research often involves the use of human subjects for data collection or testing. It is essential to prioritize the protection of human subjects and obtain informed consent, ensuring that individuals understand the nature and potential risks of their participation in AI research. Safeguarding the rights and well-being of human subjects should always be a top priority in AI research and development.

Safeguarding against unintended consequences

Unintended consequences are a common concern in AI research and development. Developing robust mechanisms to identify and mitigate potential risks is crucial to prevent unwanted outcomes. Regular testing, validation, and risk assessment can help identify possible unintended consequences and allow for the implementation of appropriate safeguards.

Implications of AI on Human Autonomy and Agency

AI influence on decision-making processes

AI systems have the potential to influence human decision-making processes. Whether it is through personalized recommendations, targeted advertising, or algorithmic decision-making, AI technology can shape the choices individuals make. It is important to reflect on the extent of AI’s influence and ensure that individuals’ autonomy and agency are respected and preserved.

Manipulation and control through AI systems

There is a risk of manipulation and control through AI systems, especially in the context of personalized content and social media algorithms. By tailoring content and recommendations based on individual preferences and behaviors, AI systems can shape users’ perspectives and behaviors. It is crucial to establish ethical guidelines to prevent undue manipulation and ensure that individuals have control over their own choices.

Preserving human values and freedom of choice

As AI systems become more integrated into various aspects of our lives, it is essential to preserve human values and freedom of choice. AI technologies should be designed and utilized in a manner that aligns with human values, promoting individual autonomy and respecting diverse perspectives. Balancing the benefits of AI with the preservation of human values is essential for responsible AI development and deployment.

Unintended consequences of AI interventions

Introducing AI interventions can have unintended consequences that may impact individuals and society. It is crucial to carefully consider the potential risks and unintended outcomes of AI interventions before their deployment. Rigorous impact assessments and ongoing monitoring can help mitigate these risks and reduce the chance that an intervention causes harm.

Fairness and Bias in AI Systems

Addressing bias in training data

Training data plays a crucial role in the performance and fairness of AI systems. If training data contains biases, such as gender or racial biases, the AI systems may replicate and perpetuate these biases. Efforts should be made to address bias in training data, including the use of diverse and representative datasets and implementing techniques for bias detection and mitigation.
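One simple way to check whether a dataset is representative is to compare each group's share of the training examples with a reference share, such as its share of the population the system will serve. The sketch below assumes such reference figures are available; the function name, the sample labels, the reference shares, and the 0.1 threshold are all hypothetical.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Compare each group's share of the dataset with a reference share.

    Returns {group: dataset_share - reference_share}. Negative values mean
    the group is under-represented relative to the reference.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical group labels for ten training examples.
samples = ["a"] * 8 + ["b"] * 2
# Hypothetical reference: both groups are equally common in the population.
reference = {"a": 0.5, "b": 0.5}

gaps = representation_gaps(samples, reference)
# Flag groups whose share falls more than 10 points below the reference.
under_represented = [g for g, gap in gaps.items() if gap < -0.1]
print(gaps, under_represented)
```

Matching group shares is only a first check: a dataset can be balanced in size and still carry biased labels or features.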

Algorithmic fairness and discrimination

Achieving algorithmic fairness is an ongoing challenge in AI development. AI systems should make decisions and predictions without unjustifiably favoring or discriminating against individuals or groups based on factors such as race, gender, or religion. Fairness metrics, bias detection algorithms, and continuous monitoring are essential tools to ensure algorithmic fairness and prevent discriminatory practices.
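As an illustration of what a fairness metric can look like in practice, the sketch below computes an equal opportunity difference: among people who truly qualified, how much more often one group received a positive prediction than another. This is only one of several competing definitions of fairness, and the function names and sample data are hypothetical.

```python
def true_positive_rates(y_true, y_pred, groups):
    """Per-group share of actual positives that were predicted positive."""
    hits = {}
    actual = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual[group] = actual.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + (1 if pred == 1 else 0)
    return {g: hits[g] / actual[g] for g in actual}


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups."""
    rates = true_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical ground truth (1 = qualified) and model predictions.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

tpr = true_positive_rates(y_true, y_pred, groups)
tpr_gap = equal_opportunity_difference(y_true, y_pred, groups)
print(tpr, tpr_gap)
```

Different metrics can disagree with one another on the same predictions, so the choice of metric is itself an ethical decision that should be made explicitly.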

Diversity and inclusivity in AI development

Ensuring diversity and inclusivity in AI development teams is crucial for addressing biases and creating AI systems that are fair and unbiased. By incorporating diverse perspectives and lived experiences, AI systems are less likely to perpetuate existing social biases and more likely to promote equitable outcomes. Encouraging diversity in the field of AI development is a necessary step towards creating fair and inclusive AI systems.

Auditing and monitoring AI systems for bias

Regular auditing and monitoring of AI systems are essential to detect and correct biases. Bias detection algorithms and continuous evaluation can help identify and address any biases that may emerge or persist in AI systems. By establishing robust auditing and monitoring practices, developers can ensure that AI systems remain fair, transparent, and accountable.

Ethical Considerations in AI Governance

Regulation and policy-making for AI

The rapid advancement of AI technology necessitates the establishment of clear regulations and policies. Regulatory frameworks should address ethical considerations and ensure that AI development and deployment are conducted in a responsible and accountable manner. By implementing appropriate regulations, governments can strike a balance between promoting innovation and safeguarding the interests of individuals and society.

Ethics boards and committees

Establishing ethics boards and committees can provide guidance and oversight in AI governance. These bodies, composed of experts from various fields, can contribute to the development of ethical guidelines, assess the ethical implications of AI systems, and provide recommendations for responsible use. The involvement of diverse perspectives in AI governance is vital for ensuring ethical decision-making.

International cooperation and standards

AI governance requires international cooperation and the development of common standards. Collaborative efforts can ensure consistency in ethical guidelines, promote responsible AI development globally, and prevent a fragmented ethical landscape. By working together, countries can create a unified approach to addressing the ethical implications of AI and foster trust and collaboration in the field.

Balancing innovation and responsible use

As AI technology continues to advance, striking a balance between innovation and responsible use becomes paramount. It is essential to encourage innovation while ensuring that it aligns with ethical principles and serves the best interests of individuals and society. By fostering a culture of responsible use, developers and organizations can harness the full potential of AI technology while avoiding or mitigating potential harm.

Long-term Implications and Existential Risks of AI

AI superintelligence and control

The development of AI superintelligence carries significant long-term implications and could pose existential risks. Superintelligent AI systems could surpass human intelligence and potentially gain control over critical decision-making processes. Ensuring the safety of such systems and their alignment with human values is crucial to prevent unintended consequences and preserve human well-being.

Unpredictability and emergence of unintended behaviors

AI systems, particularly those driven by machine learning algorithms, can exhibit unpredictable behavior and emergent properties. As AI systems become more complex and capable, there is a risk of unintended behaviors or outcomes that were not anticipated in the development process. Continuous monitoring, testing, and rigorous safety measures are necessary to mitigate these risks and prevent any potential harm.

Long-term social, economic, and environmental impacts

The long-term impacts of AI extend beyond technological advancements. AI has the potential to shape society, economies, and the environment. These impacts can be both positive and negative, and it is crucial to carefully consider their long-term consequences. Responsible AI development should prioritize the well-being of individuals and the sustainability of the environment to ensure a positive and equitable future.

Mitigating potential catastrophic consequences

To mitigate potential catastrophic consequences of AI, proactive measures need to be taken. Establishing comprehensive safety protocols, conducting rigorous risk assessments, and implementing safeguards are necessary steps to prevent AI systems from causing harm. Additionally, maintaining open and transparent dialogues among researchers, policymakers, and the public can help identify and address potential risks in a timely manner.

In conclusion, the ethical implications of AI are multifaceted and require careful consideration at every stage of development and deployment. From privacy and security concerns to biases, accountability, and long-term risks, addressing these ethical considerations is vital to ensure the responsible and ethical use of AI technology. Through transparency, explainability, fairness, and a commitment to safeguarding human rights, we can harness the potential of AI while upholding ethical principles and promoting a more inclusive and equitable future.
