How To Use Natural Language Processing To Extract Insights From Text Data

This article explains how to use natural language processing (NLP) to extract insights from text data. It covers preparing text, representing it numerically, and applying techniques such as classification, sentiment analysis, named entity recognition, topic modeling, and summarization to uncover patterns, sentiments, and meanings in written content. It closes with the main challenges and limitations you should keep in mind when using NLP to support decision-making.

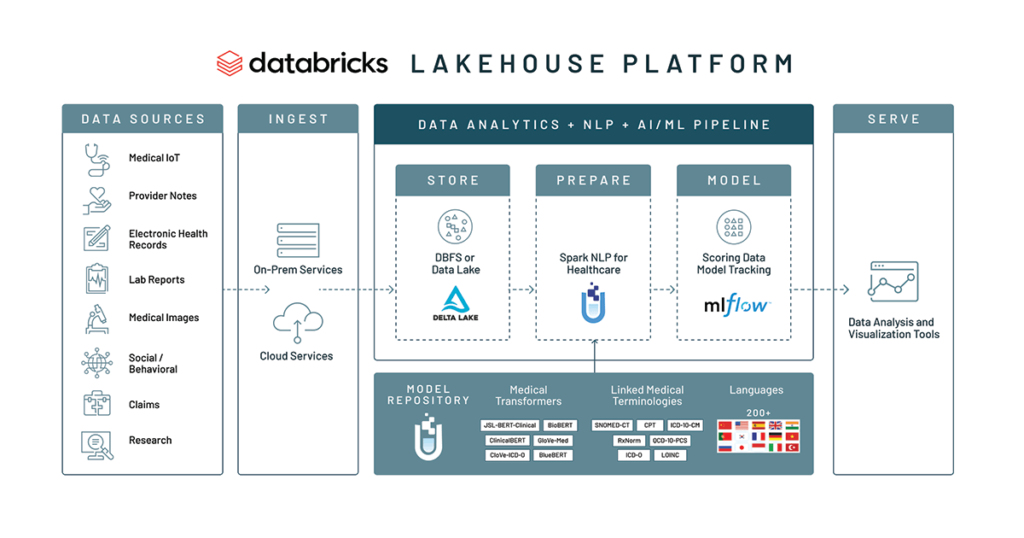

This image is property of www.databricks.com.

Understanding Natural Language Processing

What is Natural Language Processing?

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that focuses on the interaction between computers and human language. It covers the ability of computers to understand, interpret, and generate human language in a way that is meaningful and useful. NLP lets machines read text, process speech, and derive meaning from both, which supports a wide range of applications such as chatbots, language translation, and sentiment analysis.

Why is Natural Language Processing important?

Natural Language Processing is important because it bridges the gap between humans and machines by enabling computers to understand and process human language. This has significant implications in various fields such as customer service, healthcare, finance, and marketing, where large amounts of text data are generated daily. NLP allows organizations to analyze and extract valuable insights from this data, enabling them to make informed decisions, automate processes, improve customer experiences, and gain a competitive edge in the market.

The process of Natural Language Processing

The process of Natural Language Processing involves several steps, starting with preparing the text data and progressing towards building models and making predictions. The key steps in the NLP process include cleaning and preprocessing the text data, tokenization to break the text into smaller units, stop word removal, stemming and lemmatization to reduce words to their base form, exploratory data analysis to understand the data, feature extraction and selection, building a classification model, and evaluating its performance. Additionally, techniques such as text representation, sentiment analysis, named entity recognition, topic modeling, and text summarization play vital roles in the overall NLP process.

Preparing Text Data for NLP

Cleaning and preprocessing the text data

Before feeding the text data into an NLP pipeline, it is important to clean and preprocess it. This typically involves removing information that is irrelevant to the task, such as punctuation, numbers, and special characters. What counts as irrelevant depends on the task: numbers matter when you need to extract dates or prices, for example. Cleaning may also involve converting the text to lowercase, removing extra whitespace, and handling encoding issues. Additionally, text normalization techniques such as removing stop words and applying stemming or lemmatization can help standardize the text and improve the efficiency of downstream NLP tasks.
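
The cleaning steps described above can be sketched in a few lines of Python. This is a minimal illustration that assumes English text and treats every character outside `a-z` as noise; the function name `clean_text` is hypothetical, and a real project would adjust the rules to its own data.

```python
import re
import unicodedata

def clean_text(text: str) -> str:
    """Lowercase the text, drop punctuation and digits, and collapse whitespace."""
    # Normalize Unicode so that equivalent characters share one representation.
    text = unicodedata.normalize("NFKC", text)
    text = text.lower()
    # Assumption: English-only input, so anything that is not a-z or
    # whitespace is replaced with a space.
    text = re.sub(r"[^a-z\s]", " ", text)
    # Collapse runs of whitespace and trim the ends.
    return re.sub(r"\s+", " ", text).strip()

print(clean_text("  Hello, World!!  NLP in 2024 is   GREAT.  "))
# hello world nlp in is great
```

Note that the year is removed along with the punctuation. If numbers carry meaning in your data, relax the pattern accordingly.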

Tokenization

Tokenization is the process of breaking down the text into individual units called tokens. These tokens can be sentences, words, or even characters, depending on the specific requirements of the NLP task. Tokenization is essential as it provides the basis for further analysis and allows the computer to understand the structure of the text. There are various tokenization techniques available, such as whitespace tokenization, rule-based tokenization, and statistical tokenization. Choosing the right technique depends on the language, domain, and specific requirements of the NLP project.
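
As a rough illustration of rule-based tokenization, the sketch below splits text into sentences and words using regular expressions. The function names are hypothetical and the rules are deliberately naive: the sentence splitter, for instance, will break on abbreviations such as "Dr.".

```python
import re

def sentence_tokenize(text: str) -> list[str]:
    """Split after '.', '!' or '?' when followed by whitespace (naive rule)."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

def word_tokenize(text: str) -> list[str]:
    """Return words (keeping internal apostrophes) and single punctuation marks."""
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", text)

print(sentence_tokenize("NLP is fun. Is it hard? Not really!"))
# ['NLP is fun.', 'Is it hard?', 'Not really!']
print(word_tokenize("Don't panic, it's fine."))
# ["Don't", 'panic', ',', "it's", 'fine', '.']
```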

Stop word removal

Stop words are very common words that carry little meaning on their own in a given language. Examples include “a,” “the,” “is,” and “and.” For many tasks, such as information retrieval and topic modeling, these words can be removed because they contribute little to the analysis and may introduce noise into the results. Removal is not always safe, however: negations such as “not” appear on many stop word lists, and dropping them can reverse the meaning of a sentence in sentiment analysis. When the stop list suits the task, removing stop words lets the NLP system focus on the more informative words in the text and reduces the size of the vocabulary.
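
A minimal sketch of stop word filtering follows. The `STOP_WORDS` set is a tiny hand-made list used only for illustration; in practice you would use a curated, language-specific list.

```python
# Illustrative stop list (an assumption, not a standard resource).
STOP_WORDS = {"a", "an", "the", "is", "are", "and", "or", "of", "to", "in"}

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Keep only tokens whose lowercase form is not in the stop list."""
    return [t for t in tokens if t.lower() not in stop_words]

tokens = "The service is slow and the food is not good".split()
print(remove_stop_words(tokens))
# ['service', 'slow', 'food', 'not', 'good']
```

The word "not" is left out of this stop list on purpose, since dropping negations can flip the meaning of a sentence for tasks such as sentiment analysis.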

Stemming and Lemmatization

Stemming and lemmatization are techniques used to reduce words to their base form. Stemming applies heuristic rules that strip suffixes from words to obtain a stem, while lemmatization uses a vocabulary and morphological analysis to reduce words to their dictionary form (lemma). Both techniques help to simplify the text data and reduce the dimensionality of the feature space, making it easier for NLP algorithms to process. The choice between stemming and lemmatization depends on the specific task and the requirements of the project. Stemming is generally faster but may produce stems that are not real words (for example, “studies” becoming “studi”), while lemmatization is slower but returns valid dictionary forms, which usually makes the results easier to interpret.
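
To make the difference concrete, here is a toy comparison. Both functions are hypothetical, simplified stand-ins: `toy_stem` strips a handful of suffixes, and `toy_lemmatize` looks words up in a four-entry dictionary. Real stemmers and lemmatizers use far richer rules and vocabularies.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order

def toy_stem(word: str) -> str:
    """Strip the first matching suffix, keeping at least three characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hand-written lemma dictionary, for illustration only.
LEMMAS = {"studies": "study", "running": "run", "better": "good", "mice": "mouse"}

def toy_lemmatize(word: str) -> str:
    """Return the dictionary form if known, otherwise the word itself."""
    word = word.lower()
    return LEMMAS.get(word, word)

for w in ["studies", "running", "better", "mice"]:
    print(w, toy_stem(w), toy_lemmatize(w))
# studies studi study
# running runn run
# better better good
# mice mice mouse
```

The stemmer is fast and needs no dictionary, but "studi" and "runn" are not words. The lemmatizer returns real words, yet only for entries its vocabulary covers.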

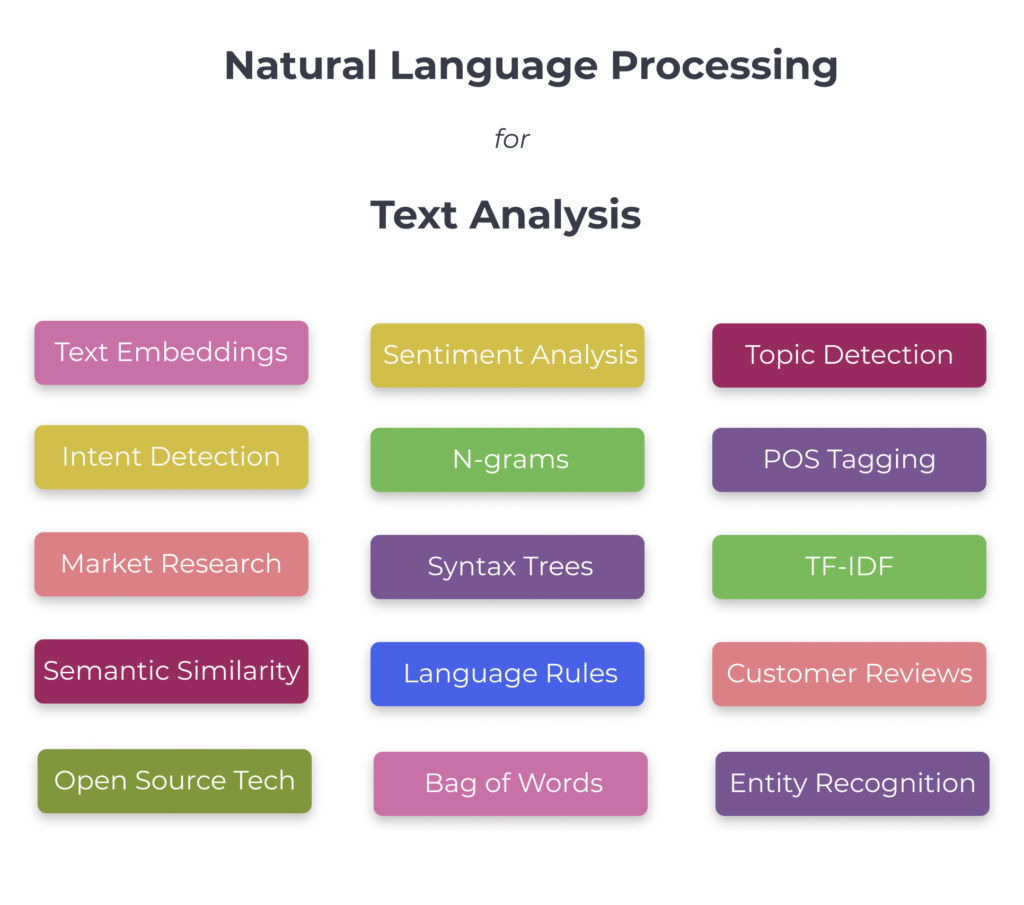

This image is property of uploads-ssl.webflow.com.

Building a Natural Language Processing Pipeline

Exploratory data analysis

Before diving into building an NLP model, it is crucial to perform exploratory data analysis (EDA) to gain insights into the data. This involves understanding the structure, distribution, and patterns in the text data. EDA helps to uncover any anomalies or issues in the data, identify key features, and make informed decisions about data preprocessing and modeling strategies. Techniques such as word frequency analysis, visualization, and statistical measures aid in the exploratory analysis of text data.
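
A first pass at word frequency analysis needs nothing more than the standard library. The sketch below uses a hypothetical helper, `corpus_stats`, to report a few basic statistics for a small made-up corpus.

```python
import re
from collections import Counter

def corpus_stats(docs, top_n=2):
    """Return basic size and frequency statistics for a list of documents."""
    tokenized = [re.findall(r"[a-z']+", d.lower()) for d in docs]
    lengths = [len(tokens) for tokens in tokenized]
    counts = Counter(tok for tokens in tokenized for tok in tokens)
    return {
        "num_docs": len(docs),
        "avg_tokens": sum(lengths) / len(lengths) if lengths else 0.0,
        "vocab_size": len(counts),
        "top_terms": counts.most_common(top_n),
    }

docs = ["The cat sat", "The dog sat down", "The end"]  # made-up sample corpus
print(corpus_stats(docs))
# {'num_docs': 3, 'avg_tokens': 3.0, 'vocab_size': 6, 'top_terms': [('the', 3), ('sat', 2)]}
```

Here the most frequent term is a stop word, which is typical of raw text and is one reason EDA often informs preprocessing choices.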

Feature extraction

Feature extraction involves converting raw text data into a numerical representation that can be used by machine learning algorithms. The aim is to capture the relevant information and patterns from the text in a format that is suitable for analysis. Common feature extraction techniques include bag-of-words, TF-IDF, word embeddings (such as Word2Vec and GloVe), and topic modeling approaches. Each technique has its own advantages and applicability, and the choice depends on the nature and purpose of the NLP task.

Feature selection

Feature selection is the process of choosing the most informative and relevant features from the extracted set of features. This helps in reducing the dimensionality of the data, improving model performance, and reducing computational costs. Techniques such as chi-squared test, mutual information, and recursive feature elimination can be used for feature selection. By selecting the right set of features, the NLP model can focus on the most influential aspects of the text and improve the accuracy and efficiency of predictions.
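
As an illustration of the chi-squared test mentioned above, the sketch below scores how strongly the presence of a term is associated with a class, using the standard formula for a 2x2 contingency table. The function names and the toy data are assumptions made for this example.

```python
def chi2_score(a: int, b: int, c: int, d: int) -> float:
    """Chi-squared statistic for a 2x2 table.

    a: documents in the class that contain the term
    b: documents outside the class that contain the term
    c: documents in the class without the term
    d: documents outside the class without the term
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # a row or column is empty, so no association can be measured
    return n * (a * d - b * c) ** 2 / denom

def term_class_chi2(docs, labels, term, target):
    """Count the 2x2 table for (term, target class) and score it."""
    a = b = c = d = 0
    for tokens, label in zip(docs, labels):
        has_term, in_class = term in tokens, label == target
        if has_term and in_class:
            a += 1
        elif has_term:
            b += 1
        elif in_class:
            c += 1
        else:
            d += 1
    return chi2_score(a, b, c, d)

docs = [{"great", "movie"}, {"great", "plot"}, {"awful", "movie"}, {"awful", "plot"}]
labels = ["pos", "pos", "neg", "neg"]
print(term_class_chi2(docs, labels, "great", "pos"))  # 4.0
print(term_class_chi2(docs, labels, "movie", "pos"))  # 0.0
```

A higher score means the term is more informative about the class, so "great" would be kept ahead of "movie" in this toy corpus.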

Building a classification model

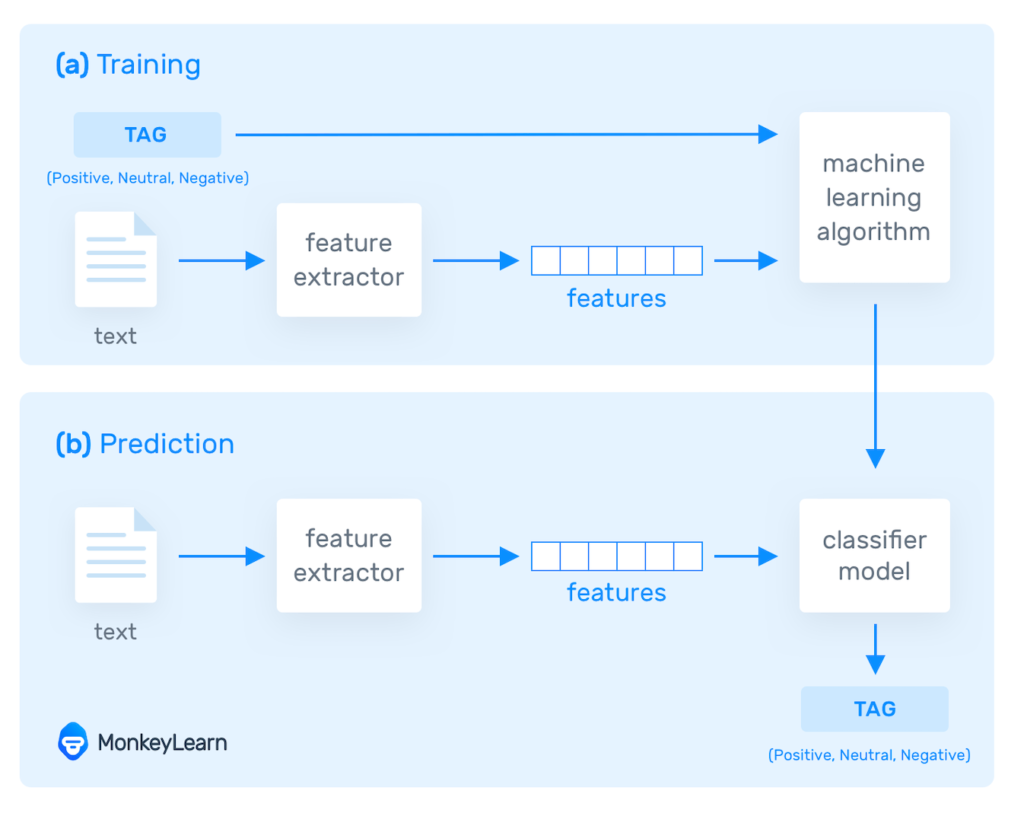

Once the text data has been preprocessed, feature extraction has been performed, and the relevant features have been selected, it is time to build a classification model. Classification models are used to predict the category or class of a given text based on its features. Various supervised learning algorithms, such as logistic regression, decision trees, naive Bayes, support vector machines, and deep learning models, can be used for text classification. The choice of the model depends on the size of the dataset, the complexity of the problem, and the available computational resources.
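
To show what one of these algorithms looks like in practice, here is a compact multinomial naive Bayes classifier with add-one (Laplace) smoothing, written in plain Python. The class name and the four training examples are made up for illustration; for real work you would normally rely on an established machine learning library.

```python
import math
from collections import Counter, defaultdict

class NaiveBayes:
    """Minimal multinomial naive Bayes for tokenized documents."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # word counts per class
        self.vocab = set()
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Start from the log prior of the class.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for tok in tokens:
                if tok not in self.vocab:
                    continue  # ignore words never seen in training
                # Add-one smoothing avoids zero probabilities.
                count = self.word_counts[label][tok] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

train_docs = [
    "great fun film".split(),
    "loved great acting".split(),
    "boring awful film".split(),
    "awful dull plot".split(),
]
train_labels = ["pos", "pos", "neg", "neg"]

model = NaiveBayes().fit(train_docs, train_labels)
print(model.predict("great acting".split()))  # pos
print(model.predict("awful plot".split()))    # neg
```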

Text Representation Techniques

Bag-of-Words (BoW) approach

The bag-of-words (BoW) approach is a commonly used technique for representing text data in NLP. In this approach, text is represented as a “bag” of its individual words, without considering the order or structure of the text. The occurrence or frequency of each word in the text is counted, and this count is used as a feature for further analysis. While the BoW approach is simple and easy to implement, it ignores important contextual information and may result in a high-dimensional and sparse representation of the text.
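
A bag-of-words representation can be sketched as follows. The helper names are hypothetical, and the documents are assumed to be tokenized already.

```python
from collections import Counter

def build_vocabulary(tokenized_docs):
    """Return the sorted list of distinct tokens across all documents."""
    return sorted({tok for doc in tokenized_docs for tok in doc})

def bow_vector(tokens, vocabulary):
    """Count how often each vocabulary term occurs in one document."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]

docs = [["the", "cat", "sat"], ["the", "dog", "sat", "the"]]
vocab = build_vocabulary(docs)
print(vocab)                       # ['cat', 'dog', 'sat', 'the']
print(bow_vector(docs[0], vocab))  # [1, 0, 1, 1]
print(bow_vector(docs[1], vocab))  # [0, 1, 1, 2]
```

Each vector has one entry per vocabulary term, which is why the representation becomes high-dimensional and mostly zeros on a realistic corpus.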

TF-IDF (Term Frequency-Inverse Document Frequency)

TF-IDF is a text representation technique that aims to overcome some of the limitations of the BoW approach. It takes into account both the frequency of occurrence of a word in a document (term frequency) and the rarity of the word across all documents (inverse document frequency). TF-IDF assigns higher weights to words that are important in a specific document but less common in other documents, helping to capture the uniqueness and relevance of words. TF-IDF representation is widely used in information retrieval, document classification, and text clustering tasks.
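
The weighting can be sketched with one common unsmoothed formulation: term frequency is the count divided by the document length, and inverse document frequency is the natural log of the number of documents divided by the number of documents containing the term. Libraries often use smoothed or otherwise adjusted variants, so treat the exact formula here as an assumption.

```python
import math
from collections import Counter

def tf_idf(tokenized_docs):
    """Return one {term: weight} dictionary per document."""
    n_docs = len(tokenized_docs)
    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for doc in tokenized_docs:
        df.update(set(doc))
    weights = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

docs = [["cat", "sat"], ["dog", "sat"]]
for w in tf_idf(docs):
    print(w)
```

In this example "sat" occurs in every document, so its weight is zero, while "cat" and "dog" each receive a positive weight in the one document where they appear.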

Word embeddings

Word embeddings are dense vector representations of words in a continuous vector space, where similar words are closer to each other. These vector representations are learned from large amounts of text using techniques such as Word2Vec and GloVe. Word embeddings capture the semantic and syntactic relationships between words, enabling better understanding and analysis of text data. They are particularly useful for tasks such as sentiment analysis, named entity recognition, and language translation.
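
Closeness between embeddings is usually measured with cosine similarity. The three-dimensional vectors below are invented for illustration; real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings, not taken from any trained model.
EMBEDDINGS = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.0, 0.9],
}

print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
```

With these made-up vectors, "king" scores much closer to "queen" than to "apple", which mirrors how related words cluster in a trained embedding space.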

Topic modeling

Topic modeling is a text analysis technique that uncovers latent topics or themes present in a collection of documents. It helps to summarize and organize large volumes of text data by identifying the underlying patterns and relationships between words. Techniques such as Latent Dirichlet Allocation (LDA) and Latent Semantic Analysis (LSA) are commonly used for topic modeling. Topic modeling can be used for tasks such as document clustering, information retrieval, and content recommendation.

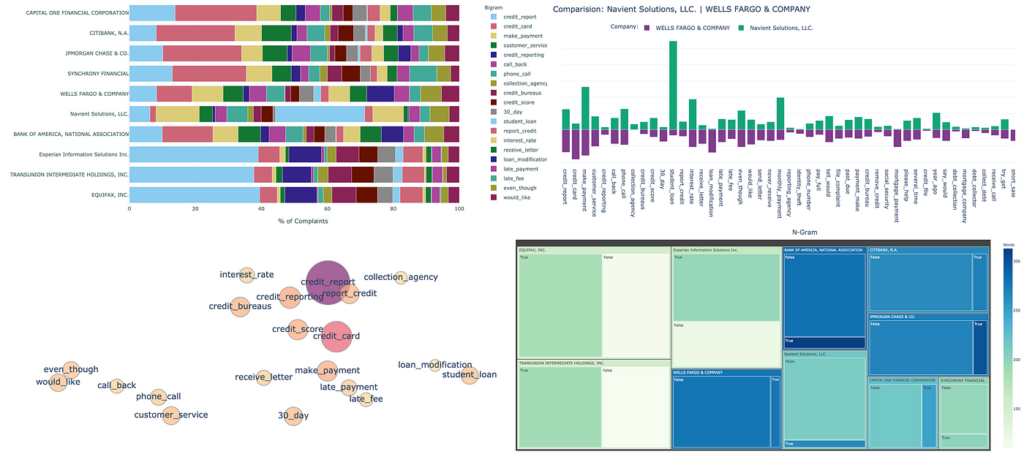

This image is property of miro.medium.com.

Text Classification

Supervised learning algorithms for text classification

Text classification is the task of assigning predefined categories or classes to a given text document. Various supervised learning algorithms can be used for text classification, depending on the size and complexity of the dataset. Logistic regression, decision trees, random forests, support vector machines, and deep learning models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are commonly employed for text classification. Each algorithm has its own strengths and weaknesses, and the choice depends on factors such as the nature of the data, available computational resources, and the desired level of interpretability.

Evaluating text classification models

The performance of text classification models needs to be evaluated to determine their effectiveness. Common evaluation metrics include accuracy, precision, recall, F1 score, and area under the receiver operating characteristic curve (AUC-ROC). Additionally, techniques such as cross-validation, stratified sampling, and confusion matrix analysis can be used to assess the model’s performance and identify areas of improvement. It is essential to choose appropriate evaluation metrics based on the specific requirements of the NLP task and consider factors such as class imbalance and misclassification costs.
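
Precision, recall, and F1 follow directly from the counts of true positives, false positives, and false negatives. The sketch below computes them for one class; the function name and the sample labels are illustrative assumptions.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Compute precision, recall and F1 for the class named by `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

y_true = ["pos", "pos", "neg", "neg", "pos"]
y_pred = ["pos", "neg", "neg", "pos", "pos"]
print(precision_recall_f1(y_true, y_pred, positive="pos"))
```

Here there are two true positives, one false positive, and one false negative, so precision, recall, and F1 all come out to two thirds, while plain accuracy is three out of five.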

Improving text classification performance

To improve the performance of text classification models, several techniques can be employed. These include increasing the size of the training data, performing feature engineering to extract more informative features, using ensemble methods such as bagging or boosting, hyperparameter tuning, and applying advanced techniques such as deep learning or transfer learning. Additionally, addressing issues such as class imbalance, noisy or sparse data, and feature selection can help to enhance the accuracy and generalization capabilities of the model.

Sentiment Analysis

What is sentiment analysis?

Sentiment analysis, also known as opinion mining, is a technique used to determine the sentiment or emotion expressed in a given text document. It involves extracting subjective information from the text and categorizing it into positive, negative, or neutral sentiment. Sentiment analysis is commonly used in social media monitoring, brand reputation management, market research, and customer feedback analysis. It helps organizations understand public opinion, customer satisfaction, and emerging trends, allowing them to make data-driven decisions and enhance their products or services.

Sentiment analysis techniques

There are various techniques available for sentiment analysis, ranging from rule-based approaches to machine learning models. Rule-based techniques rely on hand-written rules or sentiment dictionaries (lexicons) to identify sentiment-bearing words and phrases. Machine learning techniques, on the other hand, involve training a model on labeled data to predict the sentiment of unseen text. Supervised learning algorithms such as support vector machines (SVM) and naive Bayes, as well as deep learning models such as recurrent neural networks (RNNs) and transformer-based models like BERT, are often used for sentiment analysis. Hybrid approaches that combine rule-based and machine learning methods are also common and can make sentiment analysis more accurate and robust.
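
A rule-based approach can be as simple as counting words from a sentiment lexicon. The sketch below uses tiny made-up word lists and one rule, which flips the polarity of a word that directly follows a negator. The lists and the function name are assumptions for illustration only.

```python
import re

# Tiny illustrative lexicons; real lexicons contain thousands of scored entries.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "slow"}
NEGATORS = {"not", "never", "no"}

def lexicon_sentiment(text: str) -> str:
    """Classify text as positive, negative or neutral from word counts."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = (tok in POSITIVE) - (tok in NEGATIVE)  # +1, -1 or 0
        # Rule: a negator immediately before the word flips its polarity.
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_sentiment("The food was great"))        # positive
print(lexicon_sentiment("The service was not good"))  # negative
print(lexicon_sentiment("It arrived on Tuesday"))     # neutral
```

Rules like this are transparent and need no training data, but they miss sarcasm, longer-range negation, and domain-specific vocabulary, which is where trained models tend to do better.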

Applications of sentiment analysis

Sentiment analysis has a wide range of applications across various industries. In marketing and customer service, sentiment analysis helps to monitor brand sentiment, analyze customer feedback, and identify emerging trends or issues. In finance, sentiment analysis can be used to gauge investor sentiment and predict market movements. In healthcare, sentiment analysis can analyze patient feedback to evaluate the quality of care. In social media analysis, sentiment analysis can measure public opinion towards a particular event, product, or political issue. Overall, sentiment analysis provides valuable insights from large volumes of text data, enabling organizations to make informed decisions and take appropriate actions.

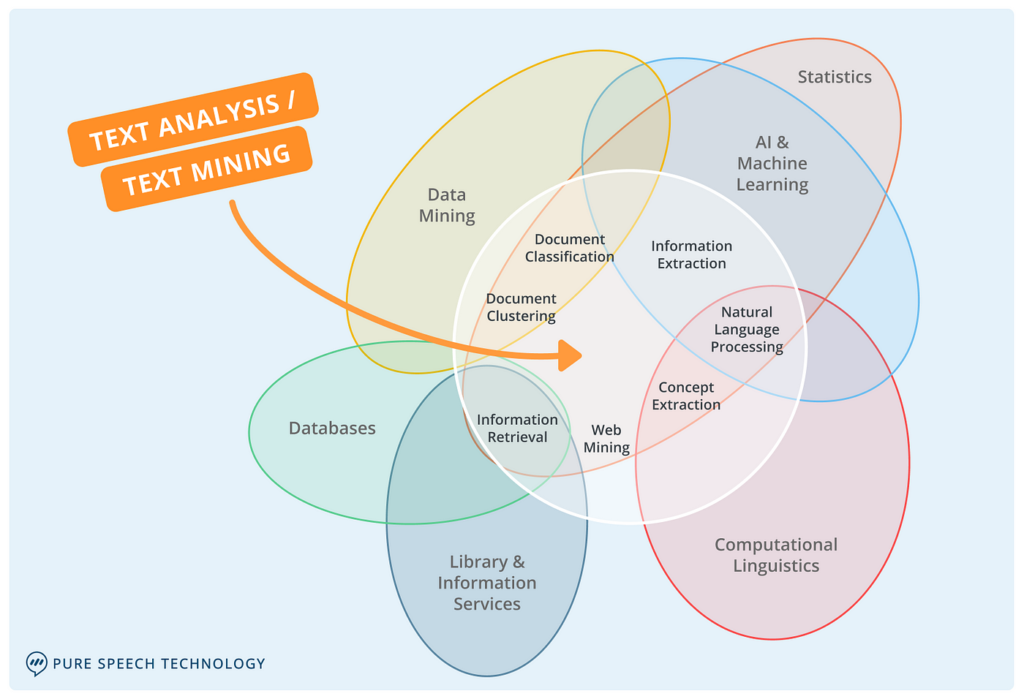

This image is property of miro.medium.com.

Named Entity Recognition

Definition of Named Entity Recognition

Named Entity Recognition (NER) is a subtask of NLP that focuses on identifying and classifying named entities in text documents. Named entities are specific words or phrases that refer to real-world entities such as people, organizations, locations, dates, and more. NER helps in extracting structured information from unstructured text, enabling tasks such as information extraction, question answering, and knowledge graph construction. Accurate NER is essential for tasks such as entity disambiguation, relationship extraction, and text understanding.

Methods for Named Entity Recognition

There are various methods for Named Entity Recognition, ranging from rule-based approaches to machine learning models. Rule-based methods involve defining patterns or regular expressions to detect named entities based on their syntactic or morphological properties. Machine learning methods, on the other hand, involve training a model on labeled data to predict the presence and type of named entities in unseen text. Popular machine learning approaches for NER include conditional random fields (CRF), support vector machines (SVM), and deep learning models such as recurrent neural networks (RNNs) and transformer-based models like BERT. Hybrid approaches that combine rule-based and machine learning methods are also common and can improve both accuracy and coverage.
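
The rule-based end of this spectrum can be sketched with regular expressions. The patterns below cover only three well-formatted entity types (ISO-style dates, dollar amounts, and email addresses); the labels, patterns, and function name are illustrative assumptions. Entities such as people or organizations generally need gazetteers or trained models.

```python
import re

# Each entry pairs an entity label with a pattern that detects it.
PATTERNS = [
    ("DATE", re.compile(r"\b\d{4}-\d{2}-\d{2}\b")),
    ("MONEY", re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
]

def extract_entities(text: str):
    """Return (entity_text, label) pairs in order of appearance."""
    found = []
    for label, pattern in PATTERNS:
        for match in pattern.finditer(text):
            found.append((match.start(), match.group(), label))
    # Sort by start offset so results follow the reading order of the text.
    return [(span, label) for _, span, label in sorted(found)]

text = "Invoice sent on 2024-03-15 for $1,250.00 to billing@example.com."
print(extract_entities(text))
# [('2024-03-15', 'DATE'), ('$1,250.00', 'MONEY'), ('billing@example.com', 'EMAIL')]
```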

Applications of Named Entity Recognition

Named Entity Recognition has various applications across different domains. In information extraction, NER helps to identify key entities and facts from unstructured documents and populate structured databases. In question answering, NER aids in understanding the context of the question and identifying relevant entities for generating accurate answers. In recommendation systems, NER extracts entities such as product names, brands, and user preferences to improve personalized recommendations. In healthcare, NER can be used to extract medical entities such as disease names, drug names, and dosage information from clinical records. Overall, NER plays a crucial role in unlocking valuable information from text data and enabling advanced NLP applications.

Topic Modeling

What is topic modeling?

Topic modeling is a technique used to uncover latent topics or themes present in a collection of documents. It allows the exploration and organization of large volumes of text data by identifying the underlying patterns and relationships between words. Topic modeling provides a way to summarize and understand the content of a document collection without having to read each document individually. By grouping documents into topics, topic modeling enables efficient information retrieval, content recommendation, and data exploration.

Latent Dirichlet Allocation (LDA)

Latent Dirichlet Allocation (LDA) is one of the most widely used algorithms for topic modeling. LDA assumes that each document in a collection is a mixture of multiple topics, and each word in the document is generated from one of the topics. The goal of LDA is to uncover the latent topics by estimating the distribution of topics in each document and the distribution of words in each topic. LDA can be used to analyze text data, identify recurring themes, discover hidden patterns, and generate topic-based summaries or recommendations.
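
The generative assumption behind LDA can be written out compactly. The notation below is the conventional one and is not taken from this article: K topics, D documents, N_d words in document d, and Dirichlet hyperparameters α and β.

```latex
\begin{align*}
\phi_k &\sim \mathrm{Dirichlet}(\beta), & k &= 1, \dots, K
  && \text{(word distribution of topic } k\text{)} \\
\theta_d &\sim \mathrm{Dirichlet}(\alpha), & d &= 1, \dots, D
  && \text{(topic mixture of document } d\text{)} \\
z_{d,n} &\sim \mathrm{Categorical}(\theta_d), & n &= 1, \dots, N_d
  && \text{(topic assigned to word } n\text{)} \\
w_{d,n} &\sim \mathrm{Categorical}(\phi_{z_{d,n}}) & &
  && \text{(observed word)}
\end{align*}
```

Only the words are observed. Fitting LDA means inferring the document-level topic mixtures and the topic-level word distributions that best explain them.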

Latent Semantic Analysis (LSA)

Latent Semantic Analysis (LSA) is another popular technique for topic modeling. LSA works by creating a matrix representation of the document collection, where each row corresponds to a document and each column represents a word. The matrix is then factorized using singular value decomposition (SVD) to extract the underlying topics. LSA captures the semantic relationships between words and documents and projects them onto a lower-dimensional space, enabling topic extraction and similarity analysis. LSA is useful for tasks such as document clustering, query expansion, and information retrieval.
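
In matrix terms, the factorization step is a truncated SVD. The symbols below are assumptions introduced for this sketch: X is the document-term matrix with m documents and n terms, and k is the number of latent dimensions kept.

```latex
X \approx X_k = U_k \Sigma_k V_k^{\top}, \qquad
U_k \in \mathbb{R}^{m \times k}, \quad
\Sigma_k \in \mathbb{R}^{k \times k}, \quad
V_k \in \mathbb{R}^{n \times k}
```

Each row of U_k Σ_k gives the coordinates of one document in the k-dimensional latent space, and each row of V_k does the same for one term, so documents and terms can be compared there with measures such as cosine similarity.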

Applications of topic modeling

Topic modeling has applications in various fields, including information retrieval, content recommendation, social media analysis, and market research. In information retrieval, topic modeling enables efficient search and retrieval of relevant documents based on user queries. In content recommendation, topic modeling helps to identify related articles, products, or services based on users’ preferences. In social media analysis, topic modeling can uncover trending topics, understand public opinion, and detect emerging themes in real-time. In market research, topic modeling aids in customer segmentation, sentiment analysis, and identifying market trends. Overall, topic modeling helps to organize, summarize, and extract valuable insights from large volumes of text data.


Text Summarization

The purpose of text summarization

Text summarization is the process of creating a concise and coherent summary of a longer text document. The purpose of text summarization is to extract the most important information from a text while preserving its meaning and reducing its length. Text summarization helps in efficient information retrieval, aids in decision-making, and enables quick understanding of the main points in a document. It is particularly useful for handling large volumes of text data, such as news articles, research papers, legal documents, and online product descriptions.

Extractive vs Abstractive Summarization

Text summarization can be classified into two main approaches: extractive and abstractive summarization. Extractive summarization involves selecting sentences or phrases from the original text and arranging them to form a summary. This approach relies on the assumption that the most important information is already present in the text. Abstractive summarization, on the other hand, involves generating new sentences that capture the essence of the original text, potentially using natural language generation techniques. Abstractive summarization requires a deeper understanding of the text and can produce more concise and fluent summaries, but it may also introduce statements that the source does not support.

Techniques for text summarization

There are several techniques used for text summarization, ranging from simple frequency-based approaches to advanced machine learning models. Frequency-based approaches score each sentence by its importance, using signals such as word frequency, TF-IDF weights, or sentence position, and select the top-ranked sentences for the summary. Machine learning approaches such as sequence-to-sequence models, transformer-based models (such as BART or T5), and reinforcement learning can also be used for text summarization. These models are trained on large amounts of data and learn to generate summaries that capture the main points of the original text.
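
A frequency-based extractive summarizer fits in a few lines. In this sketch each sentence is scored by the average corpus frequency of its non-stop words, and the top-scoring sentences are returned in their original order. The stop list, the scoring rule, and the function name are assumptions chosen for brevity.

```python
import re
from collections import Counter

STOP_WORDS = {"a", "an", "the", "and", "are", "is", "was", "of", "to", "in"}

def content_words(text: str):
    """Lowercase word tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]

def summarize(text: str, num_sentences: int = 1) -> str:
    """Pick the sentences whose words are most frequent in the whole text."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average rather than sum, so long sentences are not favored by length alone.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore original order
    return " ".join(sentences[i] for i in chosen)

text = "Cats sleep a lot. Cats and dogs are popular pets. The weather was mild."
print(summarize(text, num_sentences=1))
# Cats sleep a lot.
```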

Challenges and Limitations of NLP

Ambiguity and context understanding

One of the major challenges in NLP is dealing with ambiguity and understanding context. Natural language is inherently ambiguous, and there can be multiple interpretations of a given sentence or phrase. Resolving ambiguity and accurately understanding the intended meaning of the text are complex tasks that still pose challenges for NLP systems. Different techniques such as syntactic parsing, semantic role labeling, and coreference resolution are used to tackle these challenges, but achieving complete accuracy in context understanding remains an ongoing research area.

Processing large datasets

Processing large datasets is another challenge in NLP. Text data is often massive in size, and analyzing it can be computationally intensive and time-consuming. Techniques such as parallel processing, distributed computing, and cloud-based solutions help to address these challenges and enable efficient processing of large volumes of text data. However, there are still limitations in terms of scalability and resource requirements, especially when dealing with real-time analysis and streaming data.

Language-specific challenges

Different languages have unique linguistic characteristics and structures, presenting challenges for NLP tasks. Some languages may lack comprehensive linguistic resources or have complex syntax and morphology, making it difficult to develop accurate models. Language-specific challenges include dealing with different writing systems, handling diacritics or accent marks, handling misspellings or informal language, and addressing language-specific ambiguities or idiomatic expressions. Developing language-specific NLP models and resources is crucial for overcoming these challenges and improving the accuracy and effectiveness of NLP systems across different languages.

Ethical implications of NLP

There are several ethical implications associated with NLP. Privacy concerns arise when processing personal or sensitive information from text data. NLP systems must ensure the protection of user data and comply with privacy regulations. Bias and fairness are other ethical concerns, as NLP models can inherit biases present in the training data, leading to biased or unfair results. Efforts must be made to develop unbiased and fair NLP systems by carefully curating training data, testing for biases, and implementing safeguards against discrimination. Lastly, the generation of fake news or malicious content using NLP techniques raises concerns regarding misinformation and manipulation. It is essential to develop mechanisms for detecting and countering such malicious use of NLP technology.

In conclusion, Natural Language Processing (NLP) enables computers to understand and process human language, which has significant implications across various fields. The NLP process involves several steps, including data preprocessing, feature extraction, model building, and evaluation. Techniques such as tokenization, stop word removal, stemming, and lemmatization are used to prepare the text data for analysis. Feature extraction techniques like Bag-of-Words, TF-IDF, word embeddings, and topic modeling help to represent text data. Text classification, sentiment analysis, named entity recognition, topic modeling, and text summarization are important applications of NLP. However, NLP faces challenges such as ambiguity, processing large datasets, language-specific issues, and ethical concerns. Despite these challenges, NLP continues to advance and play a crucial role in understanding and extracting insights from text data.